
Agentic RAG vs. Traditional RAG: Which Improves AI Capabilities More?

Traditional RAG links LLMs with enterprise data to enhance AI outcomes. Agentic RAG improves on this by employing intelligent agents to process complex queries across multiple sources.

September 10, 2024


Written by Max Vermeir, Senior Director of AI Strategy at ABBYY

First there was RAG, and now there is agentic RAG, the latest technology promising to enhance AI capabilities. But what is agentic RAG, and what sets it apart from traditional RAG?

Let’s start by defining retrieval-augmented generation (RAG).

You may be hearing more about RAG if you work in artificial intelligence (AI) and are exploring how large language models (LLMs) can help your organization. LLMs have gained attention through generative AI offerings such as OpenAI’s GPT-4, Google’s Gemma, and IBM Watsonx.ai, which can create content, answer questions, and summarize text based on vast amounts of publicly available data.

The challenge with LLMs is that their output is not always accurate or relevant for enterprise use. It needs additional context from business data to be useful for specific business process applications. IT professionals need an easy way to connect the data stored in their databases and documents with LLMs’ capabilities, and that is what RAG is: the bridge or connector between LLMs and your enterprise knowledge. At query time, RAG retrieves relevant business content and supplies it to the model as context, giving you the effect of your own private LLM.
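To make the "bridge" idea concrete, here is a minimal sketch of the retrieve-then-prompt pattern. It is an illustration under stated assumptions, not a production design: the documents in `DOCS`, the word-overlap scoring, and the function names are all hypothetical, and a real system would typically use vector embeddings and pass the resulting prompt to an LLM.

```python
import re
from collections import Counter

# Hypothetical enterprise snippets standing in for a document store.
DOCS = [
    "Invoice 1042 for Acme Freight is due on 15 March.",
    "The travel policy allows economy flights only.",
    "Acme Freight shipments clear customs in Rotterdam.",
]

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def score(query: str, doc: str) -> int:
    """Toy relevance score: number of query tokens that also occur in the document."""
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return up to k documents with a non-zero score, best first."""
    ranked = sorted(docs, key=lambda doc: score(query, doc), reverse=True)
    return [doc for doc in ranked[:k] if score(query, doc) > 0]

def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augment the user question with retrieved context before it goes to an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

if __name__ == "__main__":
    print(build_prompt("When is the Acme Freight invoice due?", DOCS))
```

The model never sees the whole repository, only the few passages judged relevant to the question, which is what keeps answers grounded in business data.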

Using RAG with LLMs is a game changer for industries that rely heavily on documents stored in various databases, such as healthcare, financial services, and transportation and logistics. The information contained within repositories is critical to improving customer outcomes, operational excellence, and cost efficiency.

For example, healthcare practitioners use RAG with LLMs to improve patient outcomes by leveraging biomedical data to accelerate drug discovery and clinical trial screening. Retrieving relevant information from knowledge sources such as databases, scientific literature, and patient records augments the LLM to generate more accurate and contextually relevant outputs while limiting hallucinations and outdated information.

The financial services industry, where regulations and market trends evolve rapidly, is elevating the customer experience by providing more timely and accurate advice to clients. It is using RAG and LLMs to dynamically integrate up-to-the-minute financial regulations, market analyses, and organizational insight into AI responses. This enables financial advisory chatbots and other AI-driven customer service tools to deliver more current and authoritative information.

Now let’s look at agentic RAG. The main difference between the two is that traditional RAG relies on a single query to generate a response. Agentic RAG can process complex queries by using “intelligent agents” to cross-reference and retrieve information from multiple sources, verify data, and use multi-step reasoning to generate even more precise and contextually relevant outputs.
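The contrast can be sketched in a few lines. In this hedged example, a toy "agent" plans which sources a question needs, queries each one in turn, and keeps only evidence that passes a simple check. The `SOURCES` data, the trigger words, and the function names are hypothetical, and a real agent would usually let an LLM do the planning rather than keyword rules.

```python
from dataclasses import dataclass, field

# Hypothetical in-memory sources; a real agent would call databases or APIs.
SOURCES = {
    "shipping": {"SH-7": "Shipment SH-7 left Rotterdam on 2 May."},
    "billing": {"SH-7": "Invoice for SH-7 is unpaid."},
}

# Assumed keyword rules that stand in for an LLM-based planner.
TRIGGERS = {
    "shipping": ("shipment", "shipped", "left"),
    "billing": ("invoice", "paid"),
}

@dataclass
class AgentRun:
    question: str
    steps: list[str] = field(default_factory=list)     # trace of actions taken
    evidence: list[str] = field(default_factory=list)  # verified facts

def plan(question: str) -> list[str]:
    """Decide which sources a multi-part question needs."""
    q = question.lower()
    return [name for name, words in TRIGGERS.items() if any(w in q for w in words)]

def run_agent(question: str, entity_id: str) -> AgentRun:
    """Query each planned source in turn and keep facts that mention the entity."""
    run = AgentRun(question)
    for source in plan(question):
        run.steps.append(f"query:{source}")
        fact = SOURCES[source].get(entity_id)
        if fact is not None and entity_id in fact:  # minimal verification step
            run.evidence.append(fact)
        else:
            run.steps.append(f"no-result:{source}")
    return run

if __name__ == "__main__":
    result = run_agent("Has shipment SH-7 left, and is its invoice paid?", "SH-7")
    print(result.steps)
    print(result.evidence)
```

A traditional pipeline would issue one retrieval for the whole question. Here the two-part question produces two separate lookups, and the collected evidence would then be handed to the LLM to compose the final answer.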

Digging deeper into traditional RAG’s shortfalls, it often operates statically, unable to adapt dynamically to new information or evolving needs. Also, as the volume and diversity of data grow, scaling operations becomes a challenge. However, the largest obstacle to broad enterprise use is its inability to process multifaceted queries.

Agentic RAG turbocharges conventional retrieval systems by enhancing efficiency, accuracy, and adaptability in processing complex queries. It does this by using enhanced retrieval techniques. These techniques include sophisticated reranking algorithms to refine search precision, hybrid search methodologies that combine keyword-based and semantic search, and semantic caching to reduce computational costs and improve response times.
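As one illustration of hybrid search, the sketch below merges a keyword ranking and a semantic ranking with reciprocal rank fusion (RRF), a common fusion method chosen here as an example and not named in this article. The document IDs and both input rankings are made up for the illustration, and `k=60` is a conventional default rather than a tuned value.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists: each document earns 1 / (k + rank) per list."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))

if __name__ == "__main__":
    keyword_ranking = ["doc_a", "doc_b", "doc_c"]   # e.g. from a keyword index
    semantic_ranking = ["doc_b", "doc_d", "doc_a"]  # e.g. from vector search
    print(reciprocal_rank_fusion([keyword_ranking, semantic_ranking]))
```

Documents that rank well in both lists rise to the top, which is the practical benefit of combining the two search styles. A reranking model could then reorder this fused shortlist before it reaches the LLM.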

Its effectiveness is also enabled by multimodal integration. By incorporating images, audio, and other data types, the system can develop a more holistic understanding of queries to deliver more accurate and relevant responses. Alongside the ability to retrieve from multiple data modalities, agentic RAG evaluates and verifies data quality using filtering mechanisms that identify and exclude unreliable or low-quality information.
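A filtering mechanism of this kind can be as simple as a gate applied before retrieved passages reach the model. The following sketch assumes each passage carries a source label and a confidence score between 0 and 1. The `Passage` type, the `TRUSTED_SOURCES` allow-list, and the 0.8 threshold are hypothetical choices for illustration only.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Passage:
    text: str
    source: str
    confidence: float  # assumed 0-1 score, e.g. from OCR or an extraction model

# Assumed allow-list of systems the organization considers reliable.
TRUSTED_SOURCES = {"erp", "contracts_db"}

def filter_passages(passages: list[Passage], min_confidence: float = 0.8) -> list[Passage]:
    """Keep non-empty passages from trusted sources that meet the confidence bar."""
    return [
        p for p in passages
        if p.source in TRUSTED_SOURCES
        and p.confidence >= min_confidence
        and p.text.strip()
    ]

if __name__ == "__main__":
    candidates = [
        Passage("Payment terms are net 30.", "contracts_db", 0.95),
        Passage("Paymnt trms r net 3O.", "erp", 0.41),         # low-confidence scan
        Passage("Terms are whatever you like.", "forum", 0.99),  # untrusted source
    ]
    for passage in filter_passages(candidates):
        print(passage.text)
```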

Further setting agentic RAG ahead of traditional RAG is the agents’ ability to leverage various search engines and APIs to enhance their information-gathering capabilities. API integration with external and internal sources is essential to accessing real-time data.

However, there is one challenge that both traditional RAG and agentic RAG share: access to your organization’s data.

There are three main steps to make your enterprise data available to agentic RAG and LLMs: digitize your documents, extract and structure the data for AI consumption, and use an API to connect the structured data with the RAG or LLM system.

Most companies use an OCR solution to transform physical documents into digital formats. More advanced companies incorporate AI with OCR for intelligent document processing to achieve higher accuracy on the text and structure of the document when converted.

Once digitized, preparing your data for RAG requires more use of AI to clean and annotate data streams, learn to extract specific information automatically, and use natural language processing (NLP) to ensure the data is accurate and contextually rich. This preparation enhances your data’s quality so that the information RAG pulls from is as accurate and bias-free as possible.
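The three steps can be sketched end to end. This is a simplified illustration under stated assumptions: `digitize` is a stand-in for an OCR engine, the invoice layout and regular expressions are invented for the example, and the JSON payload shape is hypothetical, not the format of any particular RAG product.

```python
import json
import re
from dataclasses import asdict, dataclass

@dataclass
class InvoiceRecord:
    invoice_number: str
    total: float
    currency: str

def digitize(scanned_page: str) -> str:
    """Step 1 stand-in: a real system would run OCR on an image here."""
    return scanned_page  # the sketch passes the text through unchanged

def extract(text: str) -> InvoiceRecord:
    """Step 2: pull specific fields out of the raw text into a structured record."""
    number = re.search(r"Invoice\s+No\.?\s*(\S+)", text)
    total = re.search(r"Total:\s*([A-Z]{3})\s*([\d,]+\.\d{2})", text)
    if number is None or total is None:
        raise ValueError("required invoice fields not found")
    amount = float(total.group(2).replace(",", ""))
    return InvoiceRecord(number.group(1), amount, total.group(1))

def to_api_payload(record: InvoiceRecord) -> str:
    """Step 3: serialize the record as JSON for a (hypothetical) ingestion API."""
    return json.dumps({"type": "invoice", "fields": asdict(record)}, sort_keys=True)

if __name__ == "__main__":
    page = "Invoice No. INV-2291\nShipper: Acme Freight\nTotal: EUR 1,250.00"
    print(to_api_payload(extract(digitize(page))))
```

Raising an error when a required field is missing is a deliberate choice in this sketch: incomplete records are rejected at extraction time so they never reach the data the RAG system retrieves from.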

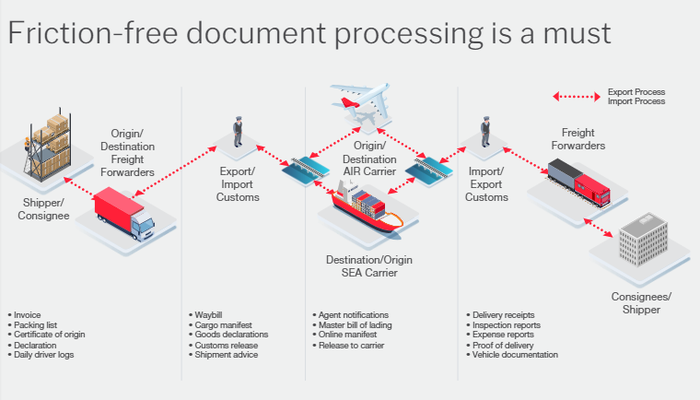

A scenario that best illustrates the digitization of information for agentic RAG and LLMs is the logistics industry, where 90% of the documents are unstructured and handwritten. From start to finish, shippers process invoices, packing lists, certificates of origin, and daily driver logs. As a shipment goes through customs, waybills, cargo manifests, and customs releases are processed, and goods are declared or collected. Once a shipment arrives at a destination, there are agent notifications, master bills of lading, and release of carriers.

When goods reach their final destination, delivery receipts, inspection receipts, expense reports, proofs of delivery, and vehicle documentation must be processed. All this information is stored within various databases by multiple stakeholders. Using APIs to connect to logistics providers’ systems, intelligent agents can retrieve relevant information from different knowledge bases to deliver business-critical insights that will improve demand forecasting, route optimization, warehouse management, predictive maintenance for fleets, and more precise delivery estimations.

IT professionals are tasked with identifying the best way to leverage AI within their organizations. You don’t want to be among the 60% of decision-makers who use AI simply out of FOMO or jump into the most headline-grabbing generative AI model. Once you understand the opportunities with LLMs and the benefits of RAG, you can see why agentic RAG will be a game changer for driving operational efficiency company-wide.

The following image illustrates the last use case scenario:

Agentic RAG with LLMs can be used in document processing throughout the shipping process to accelerate outcomes. Source: ABBYY

About the Author

Max Vermeir is Senior Director of AI Strategy at global intelligent automation company ABBYY. With a decade of experience in product and tech, Maxime is passionate about driving higher customer value with emerging technologies across various industries. His expertise from the forefront of artificial intelligence enables powerful business solutions and transformation initiatives through LLMs and other advanced applications of AI.
