T-SQL Puzzle Challenge: Grouping Connected ItemsT-SQL Puzzle Challenge: Grouping Connected Items

The challenge involves an undirected cyclic graph that represents pairs of connected nodes that have some kind of a relationship between them. The goal is to group all nodes that are connected either directly or indirectly (transitively).

November 27, 2017

My friend and fellow Data Platform MVP Davide Mauri sent me an interesting puzzle that involves grouping connected items. He had a specific application in mind that was relevant to Sensoria—the startup company he works for—but the need is common and applicable to many other use cases. In this article I present the challenge and provide four different T-SQL solutions, describing their performance and scaling characteristics. You can find a CLR UDA solution with impressive performance written by Davide in his blog entry on the topic.

Thanks, Davide, for the nice puzzle and for the back and forth communications we had discussing the solutions!

The Challenge

The challenge involves an undirected cyclic graph that represents pairs of connected nodes that have some kind of a relationship between them. In Davide’s case, each node represents a running/jogging session, and an edge connecting two nodes means that there’s a certain minimal degree of similarity between those sessions. The goal is to group all nodes that are connected either directly or indirectly (transitively). Figure 1 illustrates the task.

Figure 1 - Grouping connected items

Notice in this example that there’s no direct connection between nodes 17 and 25, but they are connected indirectly, or transitively, via the path 17-18-25, so they are part of the same group.

The input graph is represented by a table (say we call it T1) with the edges. Here are the edges from the graph shown in Figure 1:

id1 id2--- ---17 1817 2418 2418 2524 2589 90 The output should be the individual node ids, each assigned with a common group identifier; for example, you can use the minimum node id within the group as the group identifier. Here’s the desired output for the graph shown in Figure 1:

id grp---- ----17 1718 1724 1725 1789 8990 89Another use case could be identifying groups of products that are purchased together to determine their placement in stores/super markets.

Sample Data

Use the following code to create the table T1 and populate it with a small set of sample data, representing the graph shown in Figure 1:

SET NOCOUNT ON;USE tempdb; DROP TABLE IF EXISTS dbo.T1;GOCREATE TABLE dbo.T1( id1 INT NOT NULL, id2 INT NOT NULL, CONSTRAINT PK_T1 PRIMARY KEY (id1, id2), CONSTRAINT CHK_T1_id1_LT_id2 CHECK (id1 < id2) -- since graph is undirected no need to keep both (x, y) and (y, x)); -- need index for performance of some of the solutionsCREATE UNIQUE NONCLUSTERED INDEX idx_id2_id1 ON dbo.T1(id2, id1);GO -- Small set of sample dataTRUNCATE TABLE dbo.T1; INSERT INTO dbo.T1 VALUES (24, 25), (89, 90), (17, 24), (18, 24), (18, 25), (17, 18);Notice that a CHECK constraint verifies that id1 is smaller than id2. This is done since the graph is undirected, to avoid redundancy. The undirected edge 17-18 represents two directed edges: 17->18 as well as 18->17, so there’s no need to store both in the table. If you want to allow inserting rows where id2 > id1, but still store them with id1 holding the smaller value and id2 the greater one to make sure you reject duplicates, you can have an instead of trigger swapping the ids in such a case:

DROP TRIGGER IF EXISTS trg_T1_IOINS_id1_LT_id2;GOCREATE OR ALTER TRIGGER trg_T1_IOINS_id1_LT_id2 ON dbo.T1 INSTEAD OF INSERTAS IF @@ROWCOUNT = 0 RETURN;SET NOCOUNT ON; INSERT INTO dbo.T1(id1, id2) SELECT CASE WHEN id1 < id2 THEN id1 ELSE id2 END AS id1, CASE WHEN id1 < id2 THEN id2 ELSE id1 END AS id2 FROM inserted;GO TRUNCATE TABLE dbo.T1; INSERT INTO dbo.T1 VALUES (25, 24), -- will be swapped (90, 89), -- will be swapped (17, 24), (18, 24), (18, 25), (18, 17); -- will be swapped

Use the small set of sample data to test the logic and correctness of your solutions. To test the performance and scaling of your solutions, you will need larger sample data.

Use the following code to create the helper function GetNums, which returns a sequence of integers in the requested range:

DROP FUNCTION IF EXISTS dbo.GetNums;GO -- Helper function dbo.GetNumsCREATE FUNCTION dbo.GetNums(@low AS BIGINT, @high AS BIGINT) RETURNS TABLEASRETURN WITH L0 AS (SELECT c FROM (SELECT 1 UNION ALL SELECT 1) AS D(c)), L1 AS (SELECT 1 AS c FROM L0 AS A CROSS JOIN L0 AS B), L2 AS (SELECT 1 AS c FROM L1 AS A CROSS JOIN L1 AS B), L3 AS (SELECT 1 AS c FROM L2 AS A CROSS JOIN L2 AS B), L4 AS (SELECT 1 AS c FROM L3 AS A CROSS JOIN L3 AS B), L5 AS (SELECT 1 AS c FROM L4 AS A CROSS JOIN L4 AS B), Nums AS (SELECT ROW_NUMBER() OVER(ORDER BY (SELECT NULL)) AS rownum FROM L5) SELECT TOP(@high - @low + 1) @low + rownum - 1 AS n FROM Nums ORDER BY rownum;GO

Use the following code to populate the table with the desired minimum number of groups and rows per group:

TRUNCATE TABLE dbo.T1;

-- num rows = @minnumgroups * @rowspergroupDECLARE @minnumgroups AS INT = 1000, @rowspergroup AS INT = 100; INSERT INTO dbo.T1(id1, id2) SELECT id1, id1 + ABS(CHECKSUM(NEWID())) % (@rowspergroup + 1 - R.n) + 1 AS id2 FROM dbo.GetNums(1, @minnumgroups) AS G CROSS JOIN dbo.GetNums(1, @rowspergroup) AS R CROSS APPLY ( VALUES( (G.n - 1) * (@rowspergroup + 1) + R.n ) ) AS D(id1);

Assign your own values to the variables @minnumgroups and @rowspergroup as needed. For example, to test how your solution scales with respect to the number of groups, test it with different @minnumgroups values, keeping @rowspergroup unchanged. To test how your solution scales with respect to the group size, test it with different @rowspergroup values, keeping @minnumgroups unchanged.

As always, if you like T-SQL puzzles, you would probably want to try and come up with your own solutions before looking at mine. Good luck!

Solution 1: Transitive closure with table variable

The first solution that I’ll present involves computing the transitive closure of our input graph. Given an input graph G (represented by our table T1), the transitive closure of G is a new directed graph TC holding an edge for every pair of nodes that have a path between them, whether directly or transitively. Figure 2 illustrates the transitive closure of our sample input graph, using red arrows to represent the transitive closure’s directed edges.

Figure 2 - Transitive Closure

I’ll first provide a solution for producing the transitive closure of the graph stored in T1, and then I’ll show how you handle our task of grouping connected items based on it.

The following code creates a multi-statement TVF called T1TC that returns a table variable (@T1TC) with the transactive closure of T1:

DROP FUNCTION IF EXISTS dbo.T1TC;GOCREATE OR ALTER FUNCTION dbo.T1TC() RETURNS @T1TC TABLE( id1 INT NOT NULL, id2 INT NOT NULL, PRIMARY KEY (id1, id2))ASBEGIN DECLARE @added as INT; INSERT INTO @T1TC(id1, id2) SELECT id1, id2 FROM dbo.T1; SET @added = @@ROWCOUNT; INSERT INTO @T1TC(id1, id2) SELECT id2, id1 FROM dbo.T1 SET @added += @@ROWCOUNT; WHILE @added > 0 BEGIN INSERT INTO @T1TC(id1, id2) SELECT DISTINCT TC.id1, T1.id2 FROM @T1TC AS TC INNER JOIN dbo.T1 ON T1.id1 = TC.id2 WHERE NOT EXISTS (SELECT * FROM @T1TC AS TC2 WHERE TC2.id1 = TC.id1 AND TC2.id2 = T1.id2) AND TC.id1 <> T1.id2; SET @added = @@ROWCOUNT; INSERT INTO @T1TC(id1, id2) SELECT DISTINCT TC.id1, T1.id1 FROM @T1TC AS TC INNER JOIN dbo.T1 ON T1.id2 = TC.id2 WHERE NOT EXISTS (SELECT * FROM @T1TC AS TC2 WHERE TC2.id1 = TC.id1 AND TC2.id2 = T1.id1) AND TC.id1 <> T1.id1; SET @added += @@ROWCOUNT; END RETURN;ENDGOThe code starts by writing to @T1TC an edge for every direct path between two nodes from T1. One INSERT statement writes all edges representing the paths id1->id2, and a second INSERT statement writes all edges representing the paths id2->id1. Then the code enters a loop that keeps going as long as the last step found at least one new edge. In each iteration of the loop, the code uses one INSERT statement to add to @T1TC new edges (x, z) where (x, y) is present in @T1TC, and (y, z) is present in T1, and another INSERT statement to add to @T1TC new edges (x, z) where (x, y) is present in @T1TC, and (z, y) is present in T1. These two statements ensure that we traverse the graph in both left and right directions to find new transitive connections: @T1TC.id1->@T1TC.id2=T1.id1->T1.id2, hence @T1TC.id1->T1.id2, and @T1TC.id1->@T1TC.id2=T1.id2->T1.id1, hence @T1TC.id1->T1.id1.

Run the following code to test the function against the small input set of sample data:

SELECT id1, id2FROM dbo.T1TC();

You get the following output representing the transitive closure of the graph in T1:

id1 id2---- ----17 1817 2417 2518 1718 2418 2524 1724 1824 2525 1725 1825 2489 9090 89

For any node x, the transitive closure will have an edge (x, ) for every other node that is part of the same group. This, of course, includes the edge (x, ). The one exception is for x = the transitive closure does not have an edge (x, x). So, to produce the group identifier, you group the data by id1 and return id1 as the id, and as the group identifier the minimum of id1 and MIN(id2). The following query implements this logic:

SELECT id1 AS id, (SELECT MIN(id) FROM ( VALUES(id1),(MIN(id2)) ) AS D(id)) AS grpFROM dbo.T1TC()GROUP BY id1;

This query generates the desired output:

id grp

---- ----

17 17

18 17

24 17

25 17

89 89

90 89

It’s always great to see that your solution returns the correct result. Unfortunately, though, it doesn’t perform very well. Running the solution after populating T1 with 1 group with 100 rows (@minnumgroups = 1, @rowspergroup = 100), this solution took 40 seconds to complete on my laptop. That’s a tiny table, so why such bad performance? The reason for the inefficiency is the fact that the code uses a table variable in the multi-statement TVF, and the lack of density vectors and distribution statistics results in bad cardinality estimates, which lead to suboptimal choices. Figure 3 shows the estimated versus actual plans (using SentryOne’s Plan Explorer) for the last iteration of inner query 1 in the loop’s body, and Figure 4 shows those for inner query 2.

Figure 3 - Plan for solution 1 inner query 1

Figure 4 - Plan for solution 1 inner query 2

Notice in both cases the gross inaccuracies of the cardinality estimates. For example, a nested loops algorithm makes sense for both the join between @T1TC with T1 and for the anti-semi join that handles the NOT EXISTS predicate only when the number of rows involved is very small. That’s what the estimates assume, but not the case in practice. When the number of rows is not very small, a hash join algorithm does much better in both cases. Also, notice in the second query that the loop handling the anti-semi join ended up scanning dozens of millions of rows in total from @T1TC due to the many repeated scans of the table variable. In short, a table-variable based solution isn’t a good choice for this algorithm.

Solution 2: Transitive closure with temp table

Solution 2 implements the same algorithm as solution 1, only it uses a temporary table called #T1TC instead of the table variable @T1TC. Since user-defined functions don’t support temporary tables, you need to implement the solution either in a stored procedure or as an ad-hoc batch. The following code demonstrates the latter:

SET NOCOUNT ON;USE tempdb;GO CREATE TABLE #T1TC( id1 INT NOT NULL, id2 INT NOT NULL, PRIMARY KEY (id1, id2)) DECLARE @added as INT; INSERT INTO #T1TC(id1, id2) SELECT id1, id2 FROM dbo.T1; SET @added = @@ROWCOUNT; INSERT INTO #T1TC(id1, id2) SELECT id2, id1 FROM dbo.T1 SET @added = @added + @@ROWCOUNT; WHILE @added > 0 BEGIN INSERT INTO #T1TC(id1, id2) SELECT DISTINCT TC.id1, T1.id2 FROM #T1TC AS TC INNER JOIN dbo.T1 ON T1.id1 = TC.id2 WHERE NOT EXISTS (SELECT * FROM #T1TC AS TC2 WHERE TC2.id1 = TC.id1 AND TC2.id2 = T1.id2) AND TC.id1 <> T1.id2; SET @added = @@ROWCOUNT; INSERT INTO #T1TC(id1, id2) SELECT DISTINCT TC.id1, T1.id1 FROM #T1TC AS TC INNER JOIN dbo.T1 ON T1.id2 = TC.id2 WHERE NOT EXISTS (SELECT * FROM #T1TC AS TC2 WHERE TC2.id1 = TC.id1 AND TC2.id2 = T1.id1) AND TC.id1 <> T1.id1; SET @added = @added + @@ROWCOUNT;END SELECT id1 AS id, (SELECT MIN(id) FROM ( VALUES(id1),(MIN(id2)) ) AS D(id)) AS grpFROM #T1TCGROUP BY id1; DROP TABLE #T1TC; Execution times for solution 2 are significantly better than those for solution 1. For example, with one group with 100 rows, solution 2 completes in less than 1 second, compared to the 40 seconds it took solution 1 to complete. Figures 5 and 6 compare the estimated and actual query plans for the last execution of the loop’s inner queries 1 and 2, respectively.

Figure 5 - Plan for solution 2 inner query 1

Figure 6 - Plan for solution 2 inner query 2

Notice how much more accurate the cardinality estimates are this time, and the fact that the optimizer chose hash joins instead of loop joins for both the join between the temp table and T1, and for the anti-semi join used to handle the NOT EXISTS predicate.

Solution 2 is indeed much more efficient than solution 1, but it still has a performance shortcoming. Given a group of N items, the transitive closure of such a group has (1 + 2 + … + N-1) x 2 = N2 - N edges. For example, with 10 items, the transitive closure has 90 edges. With 100 items, 9,900. With 1,000 items, 999,000 edges. In other words, the number of edges grows exponentially with respect to the number of items. This means that the solution cannot really scale well. Figure 7 shows the run time of this solution in seconds for one group with increasing numbers of rows from 100 to 1,000.

Figure 7 – Performance of solution 2 with respect to group size

So unless you’re dealing with very small groups, solution 2 isn’t really a good option.

Solution 3: Unfolding connected nodes

Solution 2 first expanded all possible connections between pairs of nodes in a group by creating the transitive closure and then collapsed those to compute the group identifier. This resulted in exponential scaling. Solution 3 avoids such an expand-collapse approach. Instead, it processes one group at a time, starting with one pair (the TOP (1) unprocessed pair ORDER BY id1, id2), and then unfolds the connections like you unfold a parent-child hierarchy in both left and right directions. The value of id1 from the group’s first pair is used as the group identifier. The solution keeps writing newly found nodes along with the group identifier (id1 value from first pair) into a temporary table called #G, using a NOT EXISTS predicate to verify that it only adds unprocessed nodes. Here’s the complete solution’s code:

SET NOCOUNT ON;USE tempdb;GO CREATE TABLE #G( id INT NOT NULL, grp INT NOT NULL, lvl INT NOT NULL, PRIMARY KEY NONCLUSTERED (id), UNIQUE CLUSTERED(lvl, id)); DECLARE @lvl AS INT = 1, @added AS INT; INSERT INTO #G(id, grp, lvl) SELECT A.id, A.grp, @lvl AS lvl FROM ( SELECT TOP (1) id1, id2 FROM dbo.T1 ORDER BY id1, id2 ) AS D CROSS APPLY ( VALUES(id1, id1),(id2, id1) ) AS A(id, grp); SET @added = @@ROWCOUNT; WHILE @added > 0BEGIN WHILE @added > 0 BEGIN SET @lvl += 1; INSERT INTO #G(id, grp, lvl) SELECT DISTINCT T1.id2 AS id, G.grp, @lvl AS lvl FROM #G AS G INNER JOIN dbo.T1 ON G.id = T1.id1 WHERE lvl = @lvl - 1 AND NOT EXISTS ( SELECT * FROM #G AS G WHERE G.id = T1.id2 ) SET @added = @@ROWCOUNT; INSERT INTO #G(id, grp, lvl) SELECT DISTINCT T1.id1 AS id, G.grp, @lvl AS lvl FROM #G AS G INNER JOIN dbo.T1 ON G.id = T1.id2 WHERE lvl = @lvl - 1 AND NOT EXISTS ( SELECT * FROM #G AS G WHERE G.id = T1.id1 ); SET @added += @@ROWCOUNT; END; INSERT INTO #G(id, grp, lvl) SELECT A.id, A.grp, @lvl AS lvl FROM ( SELECT TOP (1) id1, id2 FROM dbo.T1 WHERE NOT EXISTS ( SELECT * FROM #G AS G WHERE G.id = T1.id1 ) ORDER BY id1, id2 ) AS D CROSS APPLY ( VALUES(id1, id1),(id2, id1) ) AS A(id, grp); SET @added = @@ROWCOUNT;END; SELECT id, grpFROM #G; DROP TABLE #G;

Figure 8 shows the performance of this solution with respect to the group size.

Figure 8 – Performance of solution 3 with respect to group size

As you can see, this solution scales linearly with respect to group size, and can process much bigger groups compared to solution 2.

However, when you increase the number of groups, keeping the group size the same, the run time increases quite drastically in an extra linear fashion (detailed performance graph provided later in Figure 11). For example, following are run times for three executions of solution 3 with varying numbers of groups:

100 groups of 100 rows each: 1 second

500 groups of 100 rows each: 18 seconds

1000 groups of 100 rows each: 71 seconds

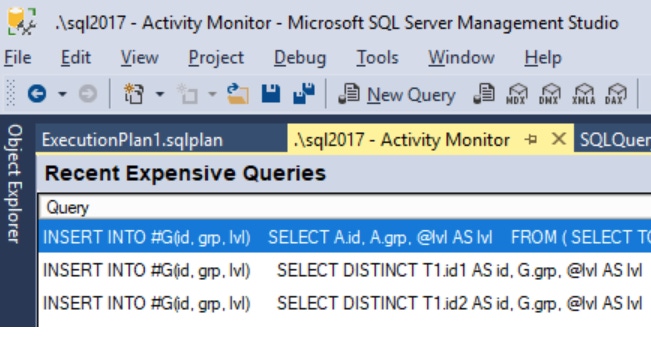

After running solution 3 with a large number of groups, inspect the Recent Expensive Queries section in Activity Monitor. Figure 9, shows the screenshot of this section in my machine.

Figure 9 - Activity Monitor - Recent Expensive Queries

The culprit that consumes most resources seems to be the highlighted TOP (1) query. This isn’t the first TOP (1) query from solution 3; rather, it's the one at the end of the outer loop. The first TOP (1) query identifies the first pair in the first group (based on id1, id2 ordering). It doesn’t have a WHERE clause since no nodes were processed yet. It’s a very cheap query.The TOP (1) query at the end of the outer loop (the one identified as expensive by Activity Monitor) needs to find the first pair of the next unprocessed group, and therefore it uses a NOT EXISTS predicate to ensure it evaluates only rows where id1 isn’t present in #G. The problem with this query is that as you advance in the groups, it scans increasing numbers of rowsin T1 until it finds the first unprocessed one. Right-click the TOP (1) query in Activity Monitor, choose Show Execution Plan, and then open this plan in SentryOne’s Plan Explorer. Figure 10 shows the plan that I got.

Figure 10 – Plan for TOP (1) query in solution 3

Plan Explorer smartly warns you that, despite the fact that the query is expected to return only one row, the operation may cause residual IO, with 100,000 rows estimated to be read. This number, of course, depends on how many groups and the group size you chose to use in your test. The point is, this solution doesn’t scale well when you increase the number of groups.

Solution 4: Unfolding connected nodes with deletion of processed edges

To circumvent the shortcoming of solution 3 and avoid the residual IO, solution 4 first copies the contents of T1 into a temporary table called #T1, and, in each round, deletes the processed pairs. To process the current round’s pairs representing either connections to the left or to the right, a DELETE statement deletes those from #T1, and uses an OUTPUT clause to write the connected ids into a table variable called @CurIDs. The ids from @CurIDs that don’t yet exist in #G are then written into #G with the current group’s group identifier. This means that the TOP (1) query at the end of the outer loop, which identifies the first row in the next group, doesn’t need a WHERE clause and touches only one row. There’s no residual IO. Here’s the complete solution’s code:

SET NOCOUNT ON;USE tempdb;GO SELECT id1, id2INTO #T1FROM dbo.T1; CREATE UNIQUE CLUSTERED INDEX idx_id1_id2 ON #T1(id1, id2);CREATE UNIQUE NONCLUSTERED INDEX idx_id2_id1 ON #T1(id2, id1); CREATE TABLE #G( id INT NOT NULL, grp INT NOT NULL, lvl INT NOT NULL, PRIMARY KEY NONCLUSTERED (id), UNIQUE CLUSTERED(lvl, id)); DECLARE @lvl AS INT = 1, @added AS INT, @id1 AS INT, @id2 AS INT;DECLARE @CurIds AS TABLE(id INT NOT NULL); SELECT TOP (1) @id1 = id1, @id2 = id2FROM #T1ORDER BY id1, id2; SET @added = @@ROWCOUNT; WHILE @added > 0BEGIN INSERT INTO #G(id, grp, lvl) VALUES (@id1, @id1, @lvl), (@id2, @id1, @lvl); DELETE FROM #T1 WHERE id1 = @id1 AND id2 = @id2; WHILE @added > 0 BEGIN SET @lvl += 1; DELETE FROM @CurIds; DELETE FROM T1 OUTPUT deleted.id2 AS id INTO @CurIds(id) FROM #G AS G INNER JOIN #T1 AS T1 ON G.id = T1.id1 WHERE lvl = @lvl - 1; INSERT INTO #G(id, grp, lvl) SELECT DISTINCT id, @id1 AS grp, @lvl AS lvl FROM @CurIds AS C WHERE NOT EXISTS ( SELECT * FROM #G AS G WHERE G.id = C.id ); SET @added = @@ROWCOUNT; DELETE FROM @CurIds; DELETE FROM T1 OUTPUT deleted.id1 AS id INTO @CurIds(id) FROM #G AS G INNER JOIN #T1 AS T1 ON G.id = T1.id2 WHERE lvl = @lvl - 1; INSERT INTO #G(id, grp, lvl) SELECT DISTINCT id, @id1 AS grp, @lvl AS lvl FROM @CurIds AS C WHERE NOT EXISTS ( SELECT * FROM #G AS G WHERE G.id = C.id ); SET @added += @@ROWCOUNT; END; SELECT TOP (1) @id1 = id1, @id2 = id2 FROM #T1 ORDER BY id1, id2; SET @added = @@ROWCOUNT;END; SELECT id, grpFROM #G; DROP TABLE #G, #T1; Figure 11 compares the performance of solution 3 and solution 4, and shows how both scale with respect to the number of groups.

Figure 11 – Impact of number of groups on performance of solutions 3 and 4

We have a clear winner!

Summary

Graph-related tasks are often challenging since it’s so easy to develop solutions that don’t scale well. The task I covered in this article of grouping connected items is no different. Solution 1, which is based on a transitive closure and a table variable, performed very badly even with tiny amounts of data. Solution 2, which is based on a transitive closure and a temporary table, performed better, but still scaled exponentially. Solution 3 handled one group at a time, starting with the first pair and unfolding the connections in a manner similar to unfolding parent-child hierarchies. It scaled beautifully with respect to the size of the group, but didn’t scale well with respect to the number of groups due to the query finding the first pair in the group needing to scan and process increasing numbers of rows. Solution 4 adds a dimension to solution 3, where it works with a copy of the data in a temporary table, deleting all processed rows in each round, therefore scaling much better with respect to the number of groups. Figure 12 includes a graph summarizing the performance of the solutions. (Solution 1 doesn’t appear in the graph because it’s so slow.)

Figure 12 – Performance summary (logarithmic scale)

About the Author

You May Also Like

.jpg?width=700&auto=webp&quality=80&disable=upscale)