Our First Taste of ‘Apple Intelligence’ Is Here. Here’s What Works.

Apple released an early preview of the AI tools in the iOS 18.1 software. Here’s what’s been helpful so far.

August 1, 2024


SAN FRANCISCO -- Apple is betting that phones with AI tools can make life a little easier – and now, we’re getting a first look at how.

This week, Apple released an early preview version of its iOS 18.1 software, containing the first of the Apple Intelligence tools it highlighted at its AI-heavy developers conference in June. The full versions of these features aren’t expected to launch until after the company announces its new iPhones in the fall, but anyone who signs up for a free developer account can take these early tools for a spin right now.

Using incomplete, or beta, software always comes with risks, but there’s another reason you should probably reconsider trying this update out for yourself. This first taste of Apple Intelligence is part of a developer beta, meaning it’s meant for app makers who need to make sure their work integrates nicely with Apple’s.

In other words, it’s not really meant for regular folks to use – but that didn’t stop us from seeing what Apple Intelligence can do right now.

But First, the Caveats

Just installing this new software doesn’t automatically grant you a pass to use Apple Intelligence – you’ll have to join a waitlist to request access, so Apple can manage the load on its servers. For now, that process can take up to a few hours, but that could change over time.

In this early preview, some of the flashier features Apple has previously discussed aren’t ready to use yet. There’s no way to chat directly with ChatGPT, for instance, or to create custom Genmojis for your group chat. While Siri has picked up a few new tricks in this preview, it can’t react to what’s happening on your iPhone’s screen, or interact directly with your apps.

And whether you want to try Apple Intelligence now or wait for the full release later this year, you’ll still need some specific hardware. Right now, only the iPhone 15 Pro and Pro Max can use these AI tools.

Here are the Apple Intelligence features we’ve found useful so far.

Summaries Galore

Don’t have time to read through your boss’s amazingly long emails? Or that one news article you can feel your attention drifting away from halfway through? With a tap, your iPhone can sum them up for you.

Open a message in Apple’s Mail app, and you’ll see a button right at the top that offers to summarize everything for you. Finding the same button when you’re reading a website is a little different; you’ll have to open the page in the Safari browser’s Reader mode before the summarize option appears.

Some devices, like Samsung’s Galaxy S24 Ultra, offer a little flexibility by giving you the option to switch between brief and more detailed versions of webpage summaries. Not so in iOS, at least for now: Asking for a summary of an email or an article leaves you with a fairly terse breakdown.

As a journalist, I have admittedly mixed feelings about letting AI try to distill our work into blurbs – even if I find the summaries helpful at times. Still, since tools like this are only becoming more common, we’ll keep testing to see how well Apple does here.

Call Recording

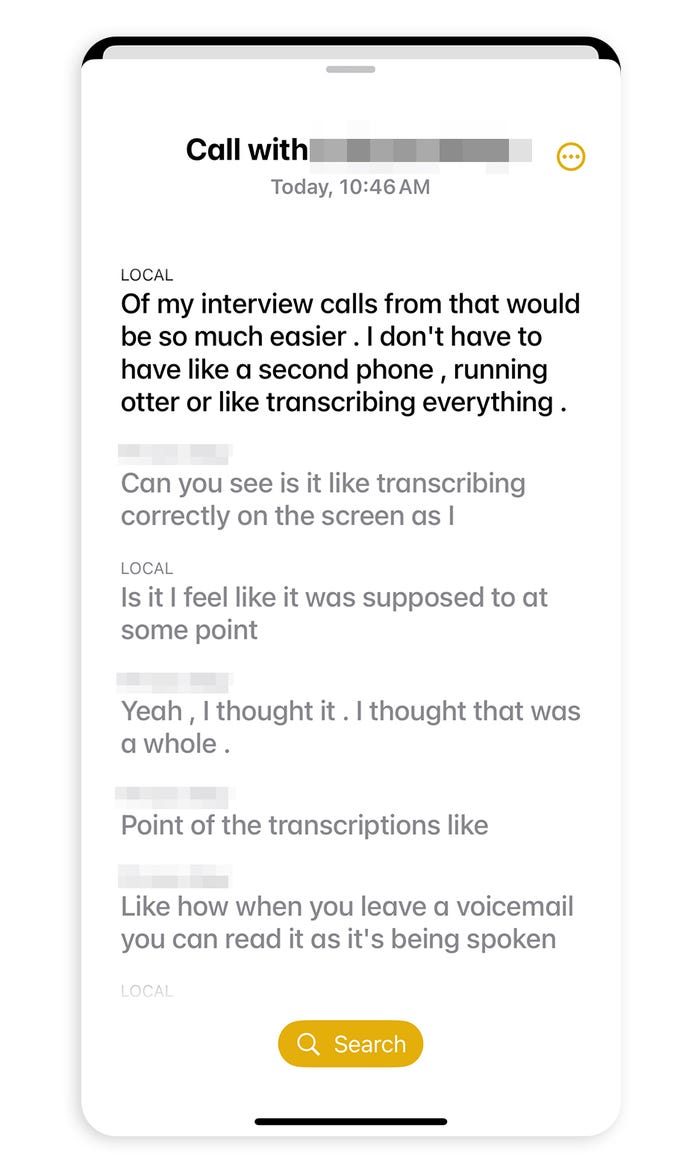

The next time you catch up with, say, your insurance agent, you may not have to reach for a pen and a scrap of paper to take notes. Instead, you can tap a button while you’re on a call to start recording both sides of the conversation; at the end, you’ll find a recording and transcription of the call inside the Notes app.

Oh, in case you were wondering, there’s no way to record a conversation without the other party knowing – a disembodied voice will chime in on the call to let everyone know what’s going on. (That said, if you use this feature, be a good person and get everyone’s permission before turning it on.)

A new feature from Apple Intelligence allows users to record calls and provide transcriptions of them. CREDIT: Chris Velazco/The Washington Post

As with webpages and emails, you can ask the iPhone to summarize that transcript with a touch. But if you need something more specific, it’s easy enough to search the transcript directly for particular words or phrases that came up, to jog your memory.

Faster Photo Searching

Let’s be honest: Who among us hasn’t spent a little too much time trying to find that one photo we’ve been thinking of?

Apple Intelligence makes that process a little easier. When you open the search tool inside the iPhone’s Photos app, you’ll be able to punch in – and get results for – more specific requests.

My recent search for “Snow in New Jersey” using iOS 18.1, for example, pulled up the only four images I have of, well, snowy days in the Garden State. The same search on a device that wasn’t running Apple’s new preview software turned up those same photos, plus a few that didn’t fit the bill – like images from Christmas that happened to contain the word “snow” in them.

Siri as Tech Support

I spent years of my life covering and reviewing new smartphones – but even I forget how to use certain features at times.

Since I began living with the iOS 18.1 preview, I’ve started just asking Siri to help out.

Apple’s voice assistant is due for some big changes this year, many of which aren’t available to try out just yet. But one thing Siri has gotten better at is acting as a tech support rep that just lives on my phone.

Consider my photos, for example: I always forget how to hide certain sensitive images – like backup photos of my driver’s license or passport – so they don’t appear in the middle of my Photo library. Now, though, asking Siri prompts the assistant to display step-by-step instructions on-screen, so I don’t need to fire up a web browser and ask Google instead.
