Examining Scalar Function Performance in SQL ServerExamining Scalar Function Performance in SQL Server

Each new release of SQL Server brings new DMOs. This article digs into dm_exec_function_stats, which made its debut in Microsoft SQL Server 2016.

Microsoft SQL Server provides a number of ways for data professionals to glean knowledge of the inner workings of the platform: From Dynamic Management Views (DMVs) and Functions (DMFs) to Extended Events, Catalog Views and an endless supply of third-party tools, you can find out pretty much anything about the internals of SQL Server if you know where to look.

I’ve written extensively about Dynamic Management Objects (DMOs), and each new release of SQL Server brings with it new DMOs. The one we’re focusing on today has been around since Microsoft SQL Server 2016: dm_exec_function_stats.

dm_exec_function_stats is similar in output to two other DMVs: dm_exec_procedure_stats and dm_exec_query_stats. Each provides metrics on performance for specific object types in cache: dm_exec_function_stats (user-defined functions), dm_exec_procedure_stats (non-system stored procedures) and dm_exec_query_stats (user generated queries).

dm_exec_function_stats provides more granular performance analysis than dm_exec_procedure_stats and dm_exec_query_stats. While procedure_stats and query_stats provide metadata around CPU, RAM, I/O and duration information around the complete execution of a stored procedure or query, function_stats does so for a user-defined function, which is never a stand-alone object. You must use a function inside of a statement. That statement by itself, or in concert with other T-SQL commands, will then be part of either a stored procedure or an ad-hoc query. This means that using dm_exec_function_stats allows us to drill into performance of a function. Using dm_exec_procedure_stats and dm_exec_query_stats includes all T-SQL that is part of those units (stored procedures or batches of ad-hoc t-sql), which may include one or more user-defined functions (UDFs).

As is the case with its cousins, dm_exec_function_stats has a significant number of output columns that cover the classes of key indicators you’d expect when performance tuning items in SQL Server:

CPU: min, max and last execution’s results

RAM: min, max and last execution’s results

Duration: min, max and last execution’s results

I/O: Logical and physical reads; physical writes: min, max and last execution’s results

Execution count

SQL Handle: uniquely identifying the T-SQL text that makes up the function’s definition

Plan Handle: identifies the query plan cached for the function

Identifying information such as database_id and object_id, type

The existence of sql_handle and plan_handle in this DMV allows you to join to other DMOs to return the defining code for the function and see the graphical execution plan. You can also use system functions like dm_name() and object_name() to make columns such as database_id and object_id more user-friendly when looking at results.

Take, for example, the following query to look at the state of user-defined scalar functions for a given instance/database sorted by duration. By default, this query returns results for the currently in-scope database through the WHERE clause I’ve coded:

SELECT TOP 25 db_name(eST.[dbid]) AS [database] , OBJECT_SCHEMA_NAME(eFS.[object_id], eFS.database_id) AS [schema_name] , OBJECT_NAME(eFS.[object_id], eFS.database_id) AS [function_name] , eFS.execution_count -- CPU , CAST(eFS.min_worker_time/1000.0 AS decimal(16,4)) AS [min_cpu_time_mcs] , CAST(eFS.max_worker_time/1000.0 AS decimal(16,4)) AS [max_cpu_time_mcs] , CAST(eFS.total_worker_time/(ISNULL(eFS.execution_count, 1.0)) AS decimal(16,4)) AS [avg_cpu_time_mcs] -- ELAPSED TIME , CAST( eFS.min_elapsed_time/1000.0 AS decimal(16,4)) AS [min_duration_mcs] , CAST(eFS.max_elapsed_time/1000.0 AS decimal(16,4)) AS [max_duration_mcs] , CAST(eFS.total_elapsed_time/(ISNULL(eFS.execution_count, 1.0)) AS decimal(16,4)) AS [avg_duration_mcs] -- LOGICAL READS , eFS.min_logical_reads AS [min_logical_reads] , eFS.max_logical_reads AS [max_logical_reads] , eFS.total_logical_reads/ISNULL(eFS.execution_count, 1) AS [avg_logical_reads] -- PHYSICAL READS , eFS.min_physical_reads AS [min_physical_reads] , eFS.max_physical_reads AS [max_physical_reads] , eFS.total_physical_reads/ISNULL(eFS.execution_count, 1) AS [avg_physical_reads] -- LOGICAL WRITES , eFS.min_logical_writes AS [min_writes] , eFS.max_logical_writes AS [max_writes] , eFS.total_logical_writes/ISNULL(eFS.execution_count, 1) AS [avg_writes] -- CACHE & EXEC TIMES , eFS.last_execution_time --STATEMENTS AND QUERY TEXT DETAILS , eST.text AS [procedure_code] , eFS.[plan_handle] FROM sys.dm_exec_function_stats AS eFS CROSS APPLY sys.dm_exec_sql_text(eFS.sql_handle) AS eST CROSS APPLY sys.dm_exec_query_plan (eFS.plan_handle) AS eQPWHERE eST.[dbid] = db_id()--ORDER BY eFS.total_logical_reads/ISNULL(eFS.execution_count, 1) DESC; -- [avg_logical_reads]--ORDER BY eFS.total_physical_reads/ISNULL(eFS.execution_count, 1) DESC; -- [avg_physical_reads]--ORDER BY eFS.total_logical_writes/ISNULL(eFS.execution_count, 1) DESC; -- [avg_logical_writes]ORDER BY eFS.total_worker_time/ISNULL(eFS.execution_count, 1) DESC; -- [avg_cpu]--ORDER BY eFS.total_elapsed_time/ISNULL(eFS.execution_count, 1) DESC; -- [avg_duration]

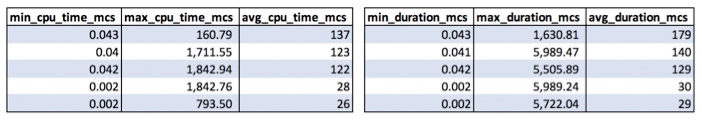

I’ve broken the results up here into logical “chunks” and also to make what is a wide result set easier to read:

The query is easily adjustable to focus on just those functions you’re interested in based on the options for sorting by duration, reads, writes, CPU, etc., depending on which line you choose to uncomment in the ORDER BY clause.

Another thing to consider when looking at the results that come from these DMOs is the unit of measure that is appropriate. You’re not likely to measure a garage in millimeters to see if your new car is going to fit. You’re likely going to use a unit of measure more appropriate for the job. The same goes with functions compared to procedures or ad-hoc query executions. Duration in the various columns provided by these objects is measured in microseconds--1/1,000,000 of a second. When I’ve written queries using dm_exec_procedure_stats and dm_exec_query_stats for analysis I’ve always reduced that unit of measure by a factor of 1,000 to measure duration as milliseconds--1/1000 of a second. This matches the default unit of measure for performance benchmarks for queries. Since functions are but a component of a larger query (be it in stored procedure or ad hoc form), I leave the unit of measure as microseconds.

In examining the results, the first thing that jumps out to me is the range of execution duration times between the average and the max values. I’d be really curious to dig into these results further to ascertain why performance is (possibly) inconsistent. I find this query valuable when attempting to identify functions that may warrant tuning attention. It's also valuable for zeroing in on performance improvements, where I’m seeing them through my analysis of stored procedures or ad-hoc sql where functions play a role. dm_exec_function_stats is another one of those tools to keep on hand when analyzing performance in your Microsoft SQL Server instances.

About the Author

You May Also Like

.jpg?width=700&auto=webp&quality=80&disable=upscale)