SQL Server 2005 Schema ScriptingSQL Server 2005 Schema Scripting

Use SQL Server’s built in tools to roll your own schema collector

January 23, 2007

Have you ever had the need to script database schema for archiving purposes? Or perhaps your boss has been bugging you to document your databases better to meet the requirements of the Sarbanes-Oxley (SOX) Act. The SQL Management Options (SMO) API in SQL Server 2005 makes it simple to script out tables, stored procedures, views, user-defined functions (UDFs), and other schema objects within your databases. This article describes a simple Windows console-mode utility called SchemaCollector, written in C# using the .NET Framework, that you can use to collect database schema. You specify a SQL Server instance to run the utility against as a command-line argument, and the schema collector program generates individual schema output files for tables, stored procedures, views, functions, and roles in appropriate subdirectories within a directory hierarchy. You can download the full source code above.

Why Script Your Own Schema Collector?

You might be wondering why you might want to write your own schema-collection script when you could choose to purchase a third-party tool to do the job. With many companies nowadays being asked to "do more with less," some database professionals might find that it's more cost-effective to use the powerful functionality that's built into SQL Server. In addition, when a problem arises or a change occurs in the environment, you can tweak your code and quickly get back on track.

Of course, although generating an individual file for each database object might be the most efficient method from a maintenance perspective, it would be burdensome to recreate a large database from these files. A typical production database—with, say, 500 tables—might generate thousands of individual files by the time the schema generator is through. So you wouldn't use the SchemaCollector utility for such a purpose. Instead, you would use this program for specific, limited purposes. For example, in my company, I've used the utility for tracking schema changes over time and publishing production schema for developers.

Tracking schema changes over time. In your company (like mine) you might be under pressure to carefully track database changes over time to comply with SOX and other regulatory initiatives. In a large company that has many groups working on various projects, pinpointing the exact date, time, and root cause of database problems can be difficult. You can use the SchemaCollector utility to check the schema output files into Visual SourceSafe (or an equivalent source-code repository system) to allow analysis of schema from a change perspective. Then, you can use diff tools and other features built into Visual SourceSafe to determine exactly when schema changes occurred, where schema changes occurred, and why things broke. The TABLES, PROCEDURES, VIEWS, FUNCTIONS, ROLES directory structure that the SchemaCollector utility uses lends itself nicely to source-code repository maintenance.

Publishing production schema for developers. Some companies have a policy that requires that programmers be completely locked out of production. In my company, the data we manage involves sensitive medical and personnel information, so we can't give developers the db_datareader permission. And because it's an administrative hassle in SQL Server 2000, we don't even try to give them rights to view schema. As a result, development is totally in the dark about what the production schema looks like.

Over time, as programmers "dirty up" their development environment, their schema gets out of sync with production. So programmers must frequently ask me to email the production definitions for a handful of tables or stored procedures so that they can bring their development environment back into sync with production. To solve this problem, I use the SchemaCollector utility to routinely capture our production schema across all servers and all databases and publish the schema to a central directory share. I created an Intranet Web viewer application that lets developers view the production schema on any production server or database at will. I can run the SchemaCollector in an ad-hoc fashion from the command line to collect schema from all databases on one SQL Server instance, or I can schedule the utility to run periodically (e.g., under the Windows Task Scheduler) and collect schema for multiple systems weekly or monthly.

Let's Get Started

The C# source-code files for the SchemaCollector utility are in the downloadable .zip file at InstantDoc ID 94510. The Program.cs

file contains the main application code, App. Config is the application configuration file, and WriteLog.cs contains a C# class that's used for logging data to a text file. First, to make calls to SMO API functions, we need to include the following directive at the top of our file:

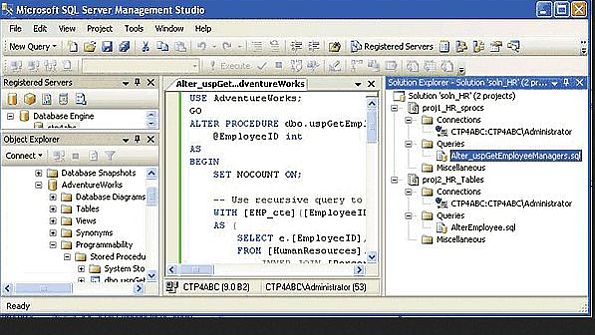

using Microsoft.SqlServer. Management.Smo;Next, we need to add some references in our .NET project so that the SMO DLLs can be included in the Visual Studio project. Before we can write code against an external component (e.g., a .NET Framework component, a COM component, an assembly or class library, an XML Web Service), our project first needs to contain a reference to that component, as Figure 1 shows. To add a reference in your project, from the main Visual Studio menu, select Project, Add Reference. Alternatively, you can right-click the name of the project in the Solution Explorer and select Add Reference. You'll see a dialog box that looks like the one in Figure 2.

For the purposes of this project, you need three SMO references: Microsoft.SqlServer. ConnectionInfo, Microsoft.SqlServer.Smo, and Microsoft.SqlServer.SmoEnum. The Microsoft.SqlServer.ConnectionInfo DLL contains some methods for parsing connection strings and accessing properties of connections. The Microsoft.SqlServer.Smo DLL contains the real guts of SMO and includes instance and utility classes that let users manipulate SQL Server programmatically. The Microsoft.SqlServer.SmoEnum DLL contains some classes that make it easy to enumerate (or "walk through") all SMO objects, as you'd do with a collection. These DLL files reside in the Microsoft Visual Studio path, typically in the C:Program FilesMicrosoft SQL Server90SDKAssemblies folder.

You'll also want to add a reference to the System.configuration class, which lets the program read configuration information from the App.config file. The App.Config configuration file is a good place to store entities that can change over time, such as database connection strings. In our case, we use the configuration file to maintain the base path to the location where schema files will be on the file system.

The program code first reads the name of the SQL Server from the command line, and attempts to establish a connection to that SQL Server instance. We attempt to create an instance of the SMO Server class by using the following logic:

// Create an Instance of the // Server class.Server theServer = new Server(str DBMSInstanceName);If the Server instantiation can't be established, the code takes an exception path and writes an error message to the screen. If the Server entity is successfully created (and the connection is successful), we attempt to retrieve the version of SQL Server. (This isn't absolutely necessary, but we do it to ensure that we really can communicate with the instance through SMO.)

Once we have a Server instance, we can begin to explore the Databases collection. The most important line in the code in this section is:

foreach (Database db in theServer.Databases){}This statement lets us iterate through all databases in the SMO Databases collection to find the SQL Server instance we want to document. When you look at the source code, you'll see that it deliberately avoids scripting information for the SQL Server 2000 pubs and Northwind sample databases because we don't need them for this example and will waste disk space. You might also want to exclude the SQL Server 2005 AdventureWorks sample database and some of the system databases on production systems. However, for development systems, you'll probably want to leave pubs, Northwind, and AdventureWorks in your schema output.

For each database it encounters, the code scripts out the tables, functions, views, stored procedures, and roles in that database. The logic to script individual entities is similar in each case. The code first creates a scripter object:

// Define a Scripter object and// set the required scripting// options.Scripter scrp = new Scripter(theServer);Then, the code sets the appropriate options for that scripter object. For example, when scripting tables, we can include indexes and triggers in the output by setting the properties of the scripting options like this:

// Set Scripting Options.scrp.Options.Indexes = true;scrp.Options.Triggers = true;Once the options are set, the code uses a foreach statement to navigate through the respective SMO entity collection for the database and scripts out the schema entities as text. The style of programming is similar regardless of whether we're scripting tables, views, or stored procedures. For example, to move through the collection of tables in a particular database, you'd use the following code:

// Iterate through Tables // Collection. foreach (Table t in db.Tables){ }For iterating through the views collection, the code looks like this:

// Iterate through Views // Collection. foreach (View v in db.Views){ }And for stored procedures, the code looks like this:

// Iterate through Stored // Procedures Collection.foreach (StoredProcedure sp in db.StoredProcedures) { }Note that when you're documenting database schema, it's important to include constraints. In the SchemaCollector program the line

scrp.Options.DriAll = true;puts in all the DRI constraints. SMO is quite flexible, so you have lots of options for including and excluding various types of constraints. For more information about how to include constraints in your schema collection script, see the topic "ScriptingOptions Members" in SQL Server 2005 Books Online (BOL). You'll need to experiment within your own environment to come up with the combination of scripting attributes that you want to include in your version of the script. In Web Figure 1, you can see an example of the output I got with the table scripting options I've specified in the SchemaCollector code for this article. Also note that some filegroup information is included by default when the SchemaCollector program is run as written. (You'll notice the ON [PRIMARY] attributes that are included in the output that Web Figure 1 shows.) But full database filegroup information isn't exposed at this level. However, the "Microsoft.SqlServer. Management.Smo Namespace" topic in SQL Server 2005 BOL explains that the FileGroup and FileGroupCollection classes are exposed within that namespace. By using the Microsoft.SqlServer.Management.Smo namespace, you could, for example, query the Files property of the FileGroup member to get a list of all the data files belonging to a file group.

Getting Results

The output of the SchemaCollector program is shown in Figure 3. Each schema entity (e.g., table, view, procedure) gets scripted to its own individual file.

This individual scripting makes it easy to archive all schema entities in a source-code repository such as Visual SourceSafe. We manufacture the appropriate script filename based on the entity name, appending the .sql suffix so that we know it's a script file. For example, for tables we use:

// Set the Script // File Name. scrp.Options. FileName =strScriptFileDir +@"" + strTableName+ ".SQL";To do the actual scripting, we need to reference a Uniform Resource Name (URN). This is a special address (or handle) that uniquely identifies SQL Server objects. The SMO coding looks like this:

// Script Out This// Table.Urn[] urn = newUrn[1]; urn[0] = t.Urn;scrp.Script(urn);When the program finishes running, the schema output will be in a directory hierarchy under the path specified by the zBASEPATH identifier in the App. Config file. By default, this path is set to C:SCHEMA.

A Final Warning

I need to give you a general warning about the schema collection process using SMO: The scripting process is not always fast. For databases with hundreds or thousands of tables and stored procedures in them, the utility might take several hours to script out all the schema entities. For this reason, you might want to schedule the schema collection program to run unattended. The program writes its output to a log file (in addition to the screen), and you can use the log file to assess progress and watch for any errors that may arise. I recommend using the screen output to monitor progress visually, but the screen output is optional and does slow the schema scripting process a bit. So if you don't want to see the screen output, you can simply comment out the WriteStatus() function calls in the C# code.

Finally, note that although I haven't done so in the program, you might gain some improved program speed if you use the Server.SetDefaultInitFields() method when you first set the Server entity. Although the details are too involved for me to include here, by default SMO doesn't retrieve every property in its list of entities to be gathered, for performance reasons. You can specify which properties are included to prevent needless round-trips to the server.

When you use the SchemaCollector program in your own environment, you can run the program as needed or schedule it to run periodically. In addition, you can easily extend the program logic to handle multiple SQL instances with a batch file or similar mechanism. Have fun using this utility to create your own personalized schema collector.

About the Author

You May Also Like

.jpg?width=700&auto=webp&quality=80&disable=upscale)