Shared-Nothing VM Live Migration with Windows Server 2012 Hyper-V

Enjoy the benefits of consolidation—without dealing with downtime

July 20, 2012

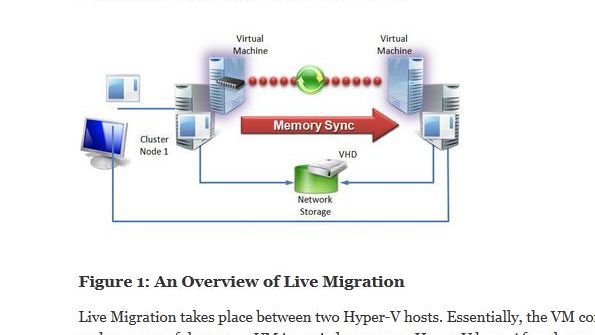

In most aspects of computing, the goal is to consolidate, share resources, and function as a single unit. This consolidation is a key focus of virtualization. It was also a major priority for Windows Server 2008 R2 Hyper-V, which introduced the ability to share SAN-hosted NTFS volumes between all the nodes in a cluster. Through the Cluster Shared Volumes (CSV) feature, all the nodes get simultaneous access to those volumes. Server 2008 R2 also introduced live migration, which lets you move a virtual machine (VM) between nodes in a cluster without downtime. Live migration does this by copying the memory and device state of the VM while it's running. The ability to move VMs with no downtime put Hyper-V on par with other hypervisors and gave organizations greater flexibility in placement and optimization of resources. For many organizations that use Hyper-V, live migration has become so important that one of the criteria for Windows Server 2012 was that no feature could be added if it would break the live migration capability.

New Capabilities

Server 2012 Hyper-V features numerous major new capabilities, including some that involve failover clustering. Two key changes relate to live migration within a failover cluster:

Live migration supports multiple concurrent migrations between pairs of hosts in Server 2012, instead of just one.

Failover clustering supports 64 nodes and 4,000 VMs in one Server 2012 failover cluster -- four times the limits of Server 2008 R2 failover clusters. Neither change is what I want to talk about in this article, though.

Server 2012 introduces a new type of live migration: a shared-nothing live migration, and I mean shared-nothing. No shared storage, no shared cluster membership -- all you need is a Gigabit Ethernet connection between the Server 2012 Hyper-V hosts. With this network connection, you can move a VM between Hyper-V hosts, including moving the VM's virtual hard disks (VHDs), memory content, processor, and device state with no downtime to the VM. In the most extreme scenario, a VM running on a laptop with VHDs on the local hard disk can be moved to another laptop that's connected by a single Gigabit Ethernet network cable.

A word of caution: Do not think that shared-nothing live migration means that failover clustering is no longer necessary. Failover clustering provides a high availability solution, whereas shared-nothing live migration is a mobility solution that gives you new flexibility in the planned movement of VMs between Hyper-V hosts in your environment. As such, live migration can supplement failover clustering. Think of being able to move VMs into, out of, and between clusters and between standalone hosts without downtime. Any storage dependencies are removed with shared-nothing live migration.

Requirements for Shared-Nothing Live Migration

The requirements for enabling shared-nothing live migration are fairly simple:

You need two (at a minimum) Server 2012 installations with the Hyper-V role enabled or the free Microsoft Hyper-V Server 2012 OS.

Each server must have access to its own location to store VMs. This location can be local or SAN-attached storage or a Server Message Block (SMB) 3.0 share.

Servers must have processors from the same manufacturer (i.e., all Intel or all AMD). If the processors are from different families or generations, you need to enable the VM's Processor Compatibility feature.

Servers must be part of the same Active Directory (AD) domain.

Servers must be connected by at least a 1Gbps connection (a separate private network for live migration traffic is recommended but not necessary), over which the two servers can communicate. The network adapter that you use must have both the Client for Microsoft Networks and the File and Printer Sharing for Microsoft Networks options enabled, as these services are used for any storage migration.

Each Hyper-V server should have the same virtual switches defined with the same names, to avoid errors and manual steps when performing the migration. If the target Hyper-V server has no virtual switch with the same name as the switch in the configuration of the VM being migrated, an error is displayed, and the administrator performing the migration must select which switch on the target Hyper-V server the VM's network adapter should connect to.

VMs that are being migrated must not use pass-through storage.

Provided that you meet these requirements, the next step is to enable the Hyper-V hosts for incoming and outgoing live migrations.
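The virtual switch requirement is easy to check before you attempt a move. The following is a minimal sketch, assuming two hosts named SERVERA and SERVERB (placeholder names; substitute your own) and an account with Hyper-V administrator rights on both. It lists any switch name that exists on the source but not on the target.

```powershell
# Placeholder host names; replace with your own source and target.
$source = 'SERVERA'
$target = 'SERVERB'

# Collect the virtual switch names defined on each host.
$sourceSwitches = @(Get-VMSwitch -ComputerName $source | Select-Object -ExpandProperty Name)
$targetSwitches = @(Get-VMSwitch -ComputerName $target | Select-Object -ExpandProperty Name)

# Any source switch name that the target lacks would force a manual
# switch selection during the migration.
$missing = @($sourceSwitches | Where-Object { $targetSwitches -notcontains $_ })

if ($missing.Count -gt 0) {
    Write-Warning "Switches missing on ${target}: $($missing -join ', ')"
} else {
    Write-Output "All virtual switch names on $source also exist on $target."
}
```

Run the check in both directions if you expect to move VMs both ways.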

Enabling the Hosts

To enable Hyper-V hosts for live migrations, select the Enable incoming and outgoing live migrations check box within the Hyper-V Settings for the host; these settings are available through Hyper-V Manager. Figure 1 shows the basic settings to enable live migration outside of a cluster environment.

In the simplest environments, selecting the Enable incoming and outgoing live migrations option, accepting the default Use Credential Security Support Provider (CredSSP) setting for authentication, and using any available network for live migration should enable shared-nothing live migrations. Behind the scenes, a firewall exception for TCP port 6600 is enabled through the built-in exception Hyper-V (MIG-TCP-In). If you have a different local firewall on your servers or have firewalls between servers, then you must manually allow this port.

Note that in the Hyper-V Settings, you can also set the maximum number of simultaneous live migrations. Server 2012 removes the single simultaneous live migration limit between any two hosts and instead limits the number of simultaneous live migrations according to available network bandwidth, to give the optimal live migration experience. However, if you want to limit the number of simultaneous live migrations to a specific number, then set that limit in the Simultaneous live migrations field. You can also configure this setting by using the MaximumVirtualMachineMigrations parameter of the Set-VMHost Windows PowerShell cmdlet.
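If you prefer to script these host settings, the same configuration can be sketched in PowerShell. This is an illustration rather than a required procedure: the limit of 2 is an arbitrary example value, and the commands are assumed to run in an elevated session on each Hyper-V host.

```powershell
# Enable incoming and outgoing live migrations on the local host.
Enable-VMMigration

# Optionally cap the number of simultaneous live migrations (example value).
Set-VMHost -MaximumVirtualMachineMigrations 2

# Confirm that the built-in firewall exception for TCP port 6600 is enabled.
Get-NetFirewallRule -DisplayName 'Hyper-V (MIG-TCP-In)' |
    Select-Object DisplayName, Enabled

# Review the resulting host settings.
Get-VMHost | Format-List VirtualMachineMigrationEnabled, MaximumVirtualMachineMigrations
```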

Although the default settings might work in simple environments or for a basic test, most environments will want to switch to Kerberos for authentication and will want to use a specific network for live migration traffic, which will include both a copy of the VM memory and its storage. Using Kerberos allows administrators to initiate live migrations remotely; using a specific network helps to manage network traffic and to ensure that the required network bandwidth is available for live migrations. Let's look at authentication first and why it's a challenge for live migration in a non-clustered environment.

Authentication for Live Migration

In a cluster environment in which all Hyper-V hosts are part of a failover cluster, all the Hyper-V hosts share a common cluster account. This account is used for communication between the hosts for authentication, simplifying (from an authentication perspective) operations such as migrations within a cluster. Outside of a cluster, each Hyper-V host has its own computer account, without a shared credential; when operations are performed, the user account of the user who is performing the action is typically used for authentication.

With a live migration, actions are taken on the source and target Hyper-V servers (and on file servers, if the VM is stored on an SMB share), both of which require the actions to be authenticated. If the administrator who is performing the live migration is logged on to the source or target Hyper-V server and initiates a shared-nothing live migration from the local Hyper-V Manager, then that administrator's credentials can be used both locally and to run commands on the target Hyper-V server. In this scenario, CredSSP works fine, allowing the administrator's credentials to be used on the remote server from the client -- basically a single authentication hop from the local machine that is performing the action to a remote server.

However, the whole goal for Server 2012 (and management in general) is remote management and automation. Having to actually log on to the source or target Hyper-V server each time you require a live migration outside of a cluster is a huge inconvenience for remote management. If a user were logged on to a separate client computer running Hyper-V Manager and tried to initiate a live migration between Hyper-V hosts A and B, that attempt would fail. The user's credentials would be used on Hyper-V host A (which is one hop from the client machine), but Hyper-V host A would be unable to use those credentials on host B to complete the live migration. The problem is that CredSSP doesn't allow credentials to be passed to a system that is more than one hop away. This is where the option to use Kerberos enables full remote management: Kerberos supports constrained delegation of authentication. Therefore, when a user performs an action on a remote server, that remote server can use the user's credentials for authentication on a second remote server.

Does this mean that a server to which I connect remotely can just take my credentials and use them on another server without my knowledge? This is where the constrained part of constrained delegation comes into play, although you'll need to perform some setup before you can use Kerberos as the authentication protocol for live migration. You need to configure delegation for each computer account that will be allowed to perform actions on another server on behalf of users. To configure this delegation, use the Active Directory Users and Computers management tool and the computer account properties of the server that will be allowed to delegate. As Figure 2 shows, the Delegation tab contains settings for the allowed level of delegation. For most computers, the configuration that this figure shows -- allowing delegation only for specific services and only for the Kerberos protocol -- is optimal.

The only service that requires delegation is the Microsoft Virtual System Migration Service, which should be enabled for the target Hyper-V server. You must set authentication to Use Kerberos only. My two Hyper-V servers are SERVERA and SERVERB; the figure shows that I am modifying the delegation properties for SERVERA and configuring Kerberos delegation for the Microsoft Virtual System Migration Service to my other server, SERVERB. I'll repeat this configuration on the SERVERB computer account, allowing it to delegate to SERVERA. Also note that I have delegation set for the Common Internet File System (CIFS) service, which is required later when VMs that are hosted on SMB file shares are migrated between hosts. After I've configured Kerberos delegation, live migration can be initiated between trusted hosts from any remote Hyper-V Manager instance.
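The same delegation can be scripted instead of set through the Delegation tab. The sketch below is one possible approach, not the only one. It assumes the Active Directory PowerShell module is available, that the delegation entries are stored in the computer account's msDS-AllowedToDelegateTo attribute, and that the domain is named contoso.example (a hypothetical name; use your own). It lets SERVERA delegate to SERVERB for both services.

```powershell
Import-Module ActiveDirectory

$source = 'SERVERA'          # computer account that is allowed to delegate
$target = 'SERVERB'          # server it may delegate to
$domain = 'contoso.example'  # hypothetical domain name; replace with yours

# Service entries for the migration service and for CIFS,
# in both short-name and fully qualified form.
$services = @(
    "Microsoft Virtual System Migration Service/$target",
    "Microsoft Virtual System Migration Service/$target.$domain",
    "cifs/$target",
    "cifs/$target.$domain"
)

# Add the entries to the source computer account.
Get-ADComputer $source |
    Set-ADObject -Add @{ 'msDS-AllowedToDelegateTo' = $services }

# Review the result.
Get-ADComputer $source -Properties 'msDS-AllowedToDelegateTo' |
    Select-Object -ExpandProperty 'msDS-AllowedToDelegateTo'
```

Swap the values of `$source` and `$target` and run it again to configure the other direction, then check the Delegation tab to confirm the result matches what you expect.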

Remember that all the hosts that participate in the live migration must have the same authentication configuration. Figure 3 and Figure 4 summarize the difference between CredSSP and Kerberos and the configurations that are required for each. Figure 3 illustrates the use of CredSSP, which requires the live migration to be initiated from one of the Hyper-V hosts.

Figure 4 illustrates the use of Kerberos authentication and remote initiation, which requires the additional AD Kerberos constrained delegation. Although more work is involved in the use of Kerberos authentication, the additional flexibility makes the work worthwhile, and I definitely recommend it. To configure the authentication type from PowerShell, use the Set-VMHost cmdlet and set the VirtualMachineMigrationAuthenticationType parameter to either CredSSP or Kerberos.
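As a short illustration of that cmdlet, the following sketch switches both hosts to Kerberos in one call and then reads the setting back. SERVERA and SERVERB are example host names; the delegation described earlier must already be in place for Kerberos-based migrations to succeed.

```powershell
# Set the live migration authentication type on both hosts.
Set-VMHost -ComputerName 'SERVERA', 'SERVERB' `
    -VirtualMachineMigrationAuthenticationType Kerberos

# Confirm that both hosts report the same authentication configuration.
Get-VMHost -ComputerName 'SERVERA', 'SERVERB' |
    Format-Table Name, VirtualMachineMigrationAuthenticationType
```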

Network Settings

Authentication was the difficult part. Next, you must set the network to use for incoming live migrations (i.e., the network on which the host will listen for and accept live migrations). By default, live migration is accepted from any network. However, I recommend that you use a private, live migration–specific network whenever possible, to ensure that bandwidth is available and separate from other network traffic. You can add and order multiple networks: Simply enter the appropriate IP subnet, using the network prefix notation, also known as the Classless Inter-Domain Routing (CIDR) notation. For example, to specify my network adapter with IP address 10.1.2.1 and subnet mask 255.255.255.0, I'd use the notation 10.1.2.0/24. An alternative is to specify the full IP address with a prefix length of 32 (e.g., 10.1.2.1/32), removing any ambiguity but requiring the configuration to be changed any time the IP address changes. Make sure that the source and target servers can communicate with each other by using the IP addresses that you specified for use with live migration, or the live migration will fail. To configure these settings by using PowerShell, use the Add-VMMigrationNetwork and Set-VMMigrationNetwork cmdlets.
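Here is how the example subnet might be configured from PowerShell. This is a sketch that assumes the 10.1.2.0/24 network from the example above and is run in an elevated session on the host that will receive migrations.

```powershell
# Register the dedicated live migration subnet (CIDR notation).
Add-VMMigrationNetwork -Subnet '10.1.2.0/24' -Priority 1

# Stop accepting live migrations on any other network.
Set-VMHost -UseAnyNetworkForMigration $false

# List the networks that the host will use, with their priority.
Get-VMMigrationNetwork
```

Repeat the configuration on the other host so that both sides listen on the same subnet.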

Using Shared-Nothing Live Migration

After you configure the Hyper-V hosts, the actual migration is simple. Select the Move action for a VM, and then select the Move the virtual machine option as the move type. Enter the name of the destination Hyper-V server to which you want to move the VM, and finally choose how the VM's assets, such as VHDs, are moved to the destination. Figure 5 shows the final move option. Because we’re focusing on the shared-nothing scenario, which means no shared storage and no use of SMB file shares, we need to select one of the first two options: Move the virtual machine's data to a single location or Move the virtual machine's data by selecting where to move the items. The first option allows you to specify a single location on the target that will store the VM configuration, hard disks, and snapshots. The second option allows you to specify a location for each VM item in addition to selecting which items should be moved.

After you make your choice, select a folder on the destination server. The move operation will start; the time needed to finish is based on the size of the VHDs and the memory that you're moving and the rate of change. However, the move will be completed without any downtime or loss of connectivity to the VM, as you can see in the accompanying video. You can also initiate the move by using the Move-VM PowerShell cmdlet.
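For reference, a shared-nothing move with Move-VM could look like the following sketch. The VM name VM1 and the folder D:\VMs\VM1 are hypothetical; the command corresponds to the first wizard option, which places all of the VM's data in a single location on the target.

```powershell
# Move the running VM and all of its storage to one folder on the target host.
# Run on the source host, or add -ComputerName 'SERVERA' to run it remotely
# (remote initiation needs the Kerberos configuration described earlier).
Move-VM -Name 'VM1' `
    -DestinationHost 'SERVERB' `
    -IncludeStorage `
    -DestinationStoragePath 'D:\VMs\VM1'
```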

Troubleshooting Live Migration

The following steps should help you troubleshoot any hiccups that you might experience:

First, make sure that you have adhered to the requirements that I listed at the start of this article.

Check the Event Viewer (Applications and Services Logs > Microsoft > Windows > Hyper-V-VMMS > Admin) for detailed messages.

Make sure that the IP configuration between the source and target is correct. The servers must be able to communicate. Try pinging the target live-migration IP address from the source server.

Run the following PowerShell command in an elevated session, to show the IP addresses that are being used for a server and the order in which they are used:

gwmi -n root\virtualization\v2 Msvm_VirtualSystemMigrationService | select MigrationServiceListenerIPAddressList

Make sure that the Hyper-V (MIG-TCP-In) firewall exception is enabled on the target.

The target server must be resolvable by DNS. Try running Nslookup on the target server. Also run

ipconfig /registerdns

on the target server and

ipconfig /flushdns

on the source server.

On the source server, use the following command to flush the Address Resolution Protocol (ARP) cache:

arp -d *

To test connectivity, try a remote Windows Management Instrumentation (WMI) command to the target (the WMI-In firewall exception must be enabled on the target); for example, replacing the placeholder with the name of the target server:

gwmi -computer <target server> -n root\virtualization\v2 Msvm_VirtualSystemMigrationService

Change the IP address that is used for live migration. For example, if you're using 10.1.2.0/24, try changing to the specific IP address 10.1.2.1/32. Also check any IPSec configurations or firewalls between the source and target. Check for multiple NICs on the same subnet, which could cause problems; try disabling one if you find any.

Set authentication to CredSSP and initiate locally from a Hyper-V server. If this solves the issue, then the problem is the Kerberos delegation.

The most common problems that I've seen are a misconfiguration of Kerberos or the IP configuration. Failing to resolve the target server via DNS will also cause problems.
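Several of these checks can be gathered into one short script. The following is a rough sketch, assuming a target host named SERVERB (substitute your own) and that the commands run from the source host with rights on both servers. It reports findings only and changes nothing.

```powershell
$target = 'SERVERB'   # example target host name

# Can the target be resolved through DNS, and does it respond on the network?
Resolve-DnsName -Name $target
Test-Connection -ComputerName $target -Count 2

# Do the target's live migration settings match what you expect?
Get-VMHost -ComputerName $target |
    Format-List VirtualMachineMigrationEnabled,
                VirtualMachineMigrationAuthenticationType,
                UseAnyNetworkForMigration

# Which networks will the target accept live migrations on?
Get-VMMigrationNetwork -ComputerName $target

# Show the most recent Hyper-V management service events on the local host.
Get-WinEvent -LogName 'Microsoft-Windows-Hyper-V-VMMS-Admin' -MaxEvents 20 |
    Format-List TimeCreated, LevelDisplayName, Message
```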

Closing Thoughts and Next Steps

Shared-nothing live migration is the most extreme type of zero-downtime migration. However, there are other types of zero-downtime migration, such as storing VMs on an SMB file share that both Hyper-V hosts can access. This approach transfers memory and device state over the network, without moving the storage. And there is still live migration within a failover cluster, which can use shared SAN-based storage through the CSV file system (CSVFS).

If you're moving a VM between failover clusters, or between a failover cluster and a standalone Hyper-V host, you'll need to remove the VM from its cluster before migrating the VM. The good news is that with Server 2012, you can add a VM to or remove a VM from a failover cluster without needing to stop the VM -- meaning no downtime to the VM, even when the migration involves a failover cluster.

Of course, even in a shared-nothing scenario, there is still a shared physical network fabric and a dependence on the VM IP configuration during the move. This is where another Server 2012 feature, Network Virtualization, can open up a world in which VMs can be moved between any hosts in different locations without changing the networking configuration of the VM OS.
