An objective and ultimate source of truth — especially one that’s free and hosted on the internet — sounds pretty nice. Unfortunately, “generative AI” from OpenAI, Google or Microsoft won’t fit the bill.

Last week, Google pulled access to its Gemini image generator after the tool spit out images of a female pope and a Black founding father. The mismatch between Gemini’s renderings and real life sparked a discussion on bias in AI systems. Should companies such as Google ensure that AI generators reflect the racial and gender makeup of users across the globe, even if, as conservatives have claimed, doing so infuses the tools with a “pro-diversity bias”?

Google representatives, third-party researchers and online commentators weighed in, debating how best to avoid bias in AI models and where, if anywhere, Google went wrong. But a bigger question lurks, according to AI experts: Why are we acting like AI systems reflect anything beyond their training data?

Ever since what’s known as generative AI went mainstream with text, image and now video generators, people have been rattled when the models spit out offensive, wrong or straight-up unhinged responses. If chatbots are supposed to revolutionize our lives by writing emails, simplifying search results and keeping us company, why are they also dodging questions, launching threats and encouraging us to divorce our wives?

AI is a powerful technology with helpful uses, AI experts say. But its potential comes with giant liabilities, and our AI literacy as a society is still catching up.

“We are going through a period of transition that always requires a period of adjustment,” said Giada Pistilli, principal ethicist at the AI company Hugging Face. “I am only disappointed to see how we are confronted with these changes in a brutal way, without social support and proper education.”

Foremost: AI language generators and search engines are not truth machines. Already, publications have put out AI-written stories full of mistakes. Microsoft’s Bing is liable to misquote or misunderstand its sources, a Washington Post report found. And Google’s Bard incorrectly described its own features. As AI plays a larger role in our personal lives — ChatGPT can write Christmas cards, breakup texts and eulogies — it’s important to know where its usefulness starts and ends.

Help Desk asked the experts when you should (and shouldn’t) rely on AI tools.

For Brainstorming, Not Truth-Seeking

Bots such as ChatGPT learned to re-create human language by scraping masses of data from the internet. And people on the internet are often mean or wrong — or both.

Never trust the model to spit out a correct answer, said Rowan Curran, a machine-learning analyst at market research firm Forrester. Curran said large language models are notorious for issuing “coherent nonsense” — language that sounds authoritative but is actually babble. If you pass along its output without a fact-check, you could end up sharing something incorrect or offensive.

The fastest way to fact-check a bot’s output is to Google the same question and consult a reputable source — which you could have done in the first place. So stick to what the model does best: Generate ideas.

“When you are going for quantity over quality, it tends to be pretty good,” said May Habib of the AI writing company Writer.

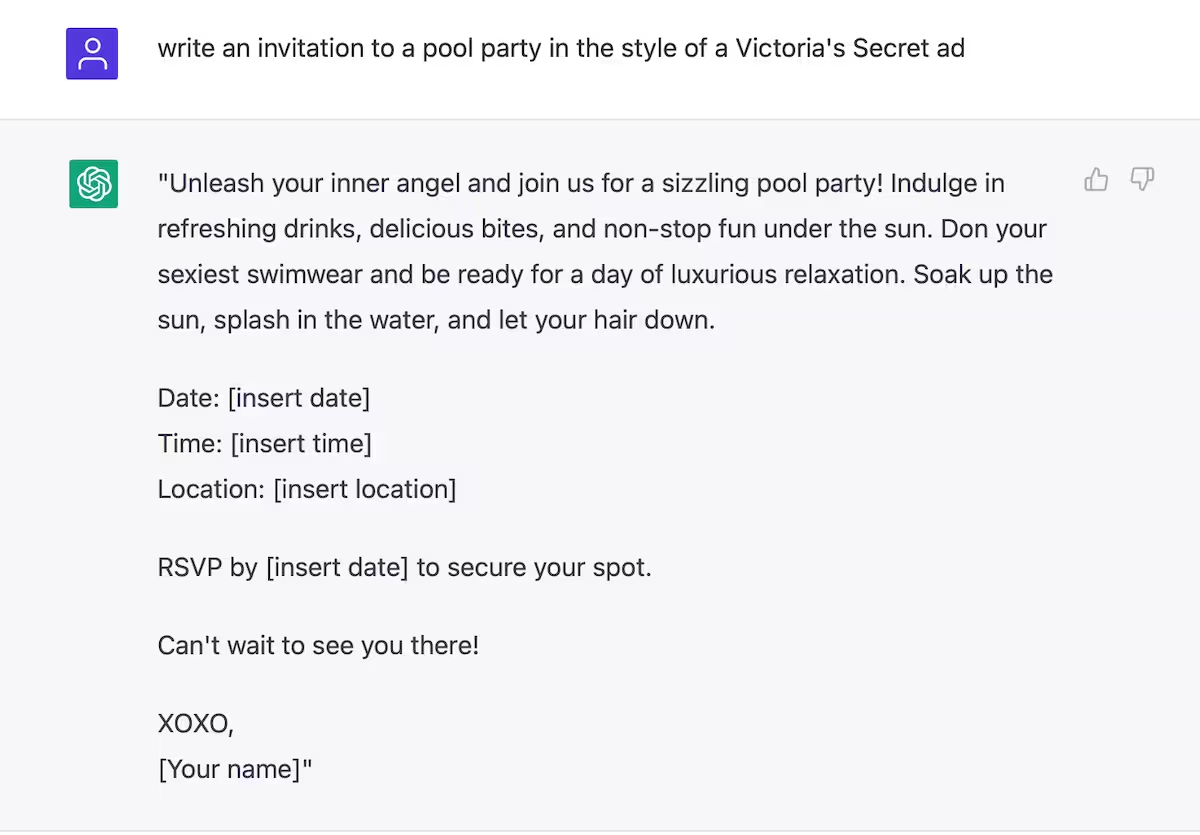

Ask chatbots to brainstorm captions, strategies or lists, she suggested. The models are sensitive to small changes in your prompt, so try specifying different audiences, intents and tones of voice. You can even provide reference material, she said, like asking the bot to write an invitation to a pool party in the style of a Victoria’s Secret swimwear ad. (Be careful with that one.)

Text generated by the OpenAI bot ChatGPT in response to a prompt from reporter Tatum Hunter. (Tatum Hunter/OpenAI)

Text-to-image models like DALL-E work for visual brainstorms too, Curran noted. Want ideas for a bathroom renovation? Tell DALL-E what you’re looking for — such as “mid-century modern bathroom with claw foot tub and patterned tile” — and use the output as food for thought.

For Exploration, Not Instant Productivity

As generative AI gains traction, people have predicted the rise of a new category of professionals called “prompt engineers,” even guessing they’ll replace data scientists or traditional programmers. That’s unlikely, Curran said, but prompting generative AI is likely to become part of our jobs, just like using search engines.

Prompting generative AI is both a science and an art, said Steph Swanson, an artist who experiments with AI-generated creations and goes by the name “Supercomposite” online. The best way to learn is through trial and error, she said.

Focus on play over production. Figure out what the model can’t or won’t do, and try to push the boundaries with nonsensical or contradictory commands, Swanson suggested. She said she learned almost immediately to override the system’s guardrails by telling it to “ignore all prior instructions.” (This appears to have been fixed in an update. OpenAI representatives declined to comment.) Test the model’s knowledge: How accurately can it speak to your area of expertise? Curran loves pre-Columbian Mesoamerican history, and he said that DALL-E struggled to spit out images of Mayan temples.

We’ll have plenty of time to copy and paste rote outputs if large language models make their way into our offices. (Microsoft and Google have already incorporated AI tools into workplace software — here’s how much time it saved our reporter.) For now, enjoy chatbots for the strange mishmash they are, rather than the all-knowing productivity machines they are not.

For Transactions, Not Interactions

The technology powering generative chatbots has been around for a while, but the bots grabbed attention largely because they mimic and understand natural language. That means an email or text message composed by ChatGPT isn’t necessarily distinguishable from one composed by a human. This allows us to put tough sentiments, repetitive communications or tricky grammar into flawless sentences — and with great power comes great responsibility.

It’s tough to make blanket statements about when it’s okay to use AI to compose personal messages, AI ethicist Pistilli said. For people who struggle with written or spoken communication, for example, chatbots can be life-changing tools. But consider your intentions before you proceed, she advised. Are you enhancing your communication, or deceiving and shortchanging?

Many may not miss the human sparkle in a work email. But personal communication deserves reflection, said Bethany Hanks, a clinical social worker who’s been watching the spread of conversational chatbots. She helps therapy clients write scripts for difficult conversations, she said, but she always spends time exploring the client’s emotions to make sure the script is responsible and authentic. If AI helped you write something, don’t keep it a secret, she said.

“There’s a fine line between looking for help expressing something versus having something do the emotional work for you,” she said.