There are a significant number of options for database developers and administrators to tune instances of SQL Server databases when the inevitable performance issues surface. It may sound counterintuitive, but it makes sense to check whether you have too many indexes in use. Read on to find out why. (And click here to learn how to deal with SQL Server 2008 and SQL Server 2008 R2 end of life.)

People tend to have their go-to approaches to tuning systems--usually associated with their core strengths. Developers tend to want to tune the code they work with: application developers within the application stack; database developers with T-SQL and stored procedure code. Meanwhile, database administrators typically focus on T-SQL code, stored procedures and the “big picture” wins: schema changes and normalization, adding indexes, utilizing proprietary tools and features of the edition of SQL Server they’re addressing (such as Resource Governor, In-Memory OLTP, etc.), and, like their database developer peers, T-SQL code. Finally there are the infrastructure engineers (and, inevitably, leadership at levels abstracted from the day-to-day tactical efforts) who may suggest “throwing hardware at the problem” by scaling memory, CPU or faster storage if available through cloud service providers.

But There Are Limits

These options are limited, though, when you’re working with a comprehensive solution that is already in use by the general public. Making code changes is difficult, and, if properly tested and released, code changes are not quick solutions when dealing with performance issues that need correcting right now.

While the option does usually exist to “throw hardware” at the problem--particularly when you can auto-scale in the cloud--you eventually reach a point at which that’s no longer a viable option. Even though Microsoft would love if it was the case, not every deployment of SQL Server is on Enterprise Edition. Many of those options that exist to quell production performance degradation don’t exist in Standard Edition. Perhaps the decision to deploy on Standard Edition was based on cost, but, frequently, it’s done because those enterprise-class features aren’t needed when the product is being planned or released. There are plenty of decisions and situations that arise that can paint you into a corner when it comes to options on tuning in crisis situations.

Time for the DBA to Shine

When you can’t quickly change code, you can’t rely on Enterprise Edition features, and you can't upscale your hardware, the burden tends to fall onto the DBA.

The DBA's first step should be to look to server-level or database-level options to ensure they’re set correctly. For example, check parallelism settings, the amount of RAM allocated to SQL compared to the remaining services running on the server, whether the amount of memory necessary for the operating system to perform in a healthy fashion is in place, NUMA settings, and so on. If anything isn't set correctly, resolving the situatuon leads to quick wins.

If that doesn't work, it’s time to turn to optimizations based on a specific problem.

What to Do When Everything Is Bad

There is one thing I look at immediately (after configuration settings) that tends to yield benefits for issues including:

- High CPU

- Excessive blocking

- High numbers of key lookups

- IO latency

- General “my database is slow” issues

That thing I look at is index usage, but not necessarily in the way you expect. Many DBAs look at adding indexes as a panacea for all performance woes--particularly at the query/stored procedure level. I look at the opposite: Do we have too many indexes? It's possible, a fact that shocks even established senior DBAs. Many people argue that indexes help speed things up. Yes, that's true, but they also come with a secret cost: They need to be maintained. I don’t mean index rebuilds and reorganizations; what I’m referring to is the overhead of keeping those indexes updated.

You see, every time you add a record to a table with an index on it, there needs to be an operation to not only update the table itself, but also update any and all indexes that may be affected by that insert. This need to maintain all affected indexes each time a record is added is also necessary for any other operation that changes the value of a column or columns and their associated indexes. And don’t forget about those delete operations--the same goes for them, too. Each one of those operations is considered an index “write.”

Before you get the idea that I’m against indexes, let’s talk about the benefits they provide: They make certain things happen faster--typically, much faster. Indexes exist to serve up data quickly when applied properly. Beneficial indexes are those that are read from frequently, but rarely written to (updated), or are read to significantly more than they are updated. Indexes that are seldom--if ever--used to satisfy reads but are on highly volatile columns enduring frequent inserts, updates or deletes are poor choices for indexing that may actually be causing much more harm than good.

Let’s say you have a table created for appointments that has an index on appointment_date. It’s probably going to see a fair amount of both reads and writes, but, typically, the appointment date won’t change so at the record level. It’s not all that volatile, even though at the table level you’re seeing a lot of writes to that index because you’re running a successful company that books a lot of appointments. There are plenty of situations that appointment_date is going to be searched on, resulting in typically far more than a single read.

Conversely, an index on the date_modified column for that table, specifically created to allow for incremental loading of your data warehouse, is also going to get updated every time a new row is created in the table, as well as each time the record is changed. Odds are, though, you’re only loading your data warehouse and making use of the index once per day or even less frequently. For every action other than data warehouse loading, that index will not be beneficial. However, it will be extremely beneficial for loading records modified for a specific range--a critical need for incremental data warehouse loading. This is going to be the case for any index that is only occasionally beneficial.

Fortunately, we have ways of seeing how frequently indexes are--and are not--being used. I wrote about this very thing a while back, and the scripts still hold up. I invite you to read Top Insights You Can Gain from the Usage Stats Dynamic Management View Part 1 and Part 2 to get an understanding of the scripts used to identify unused indexes.

Real World, Real Benefits

I was recently involved in a client engagement at which the client had painted itself into just the kind of corner I described earlier. For years, the company had scaled up its hardware when it encountered database performance issues with the Standard Edition of SQL Server, until it hit a point where there was no room to go. They could not add any more CPU because of the Standard Edition's limitation of 24 physical cores. The client had far exceeded the amount of RAM it could utilize. Upgrading to the Enterprise Edition of SQL Server would have been expensive solution and would have required downtime the client couldn’t afford.

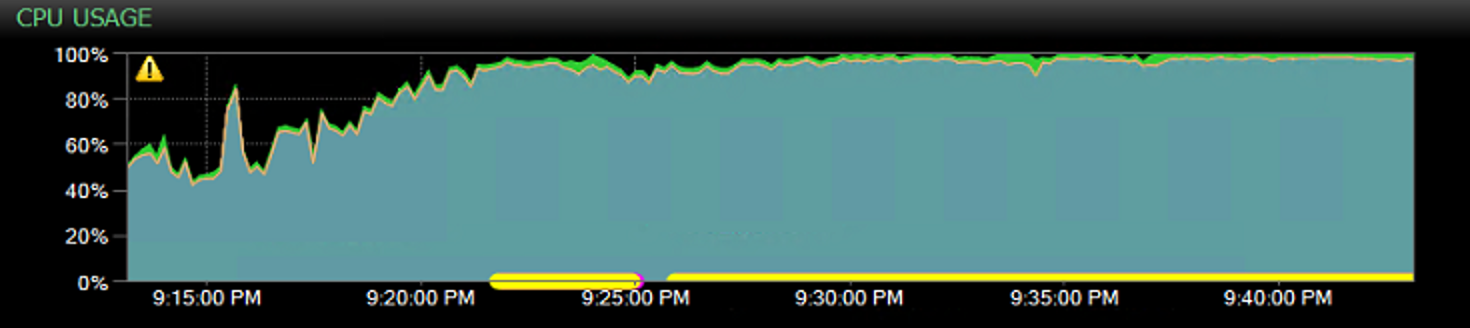

This customer was struggling with a peak load that was in crisis: extremely high waits on IO resources, expensive key lookups, and high CPU relating from traffic flowing in that was prepared ad-hoc T-SQL. The server was falling behind and getting more and more blocked as peak traffic continued on:

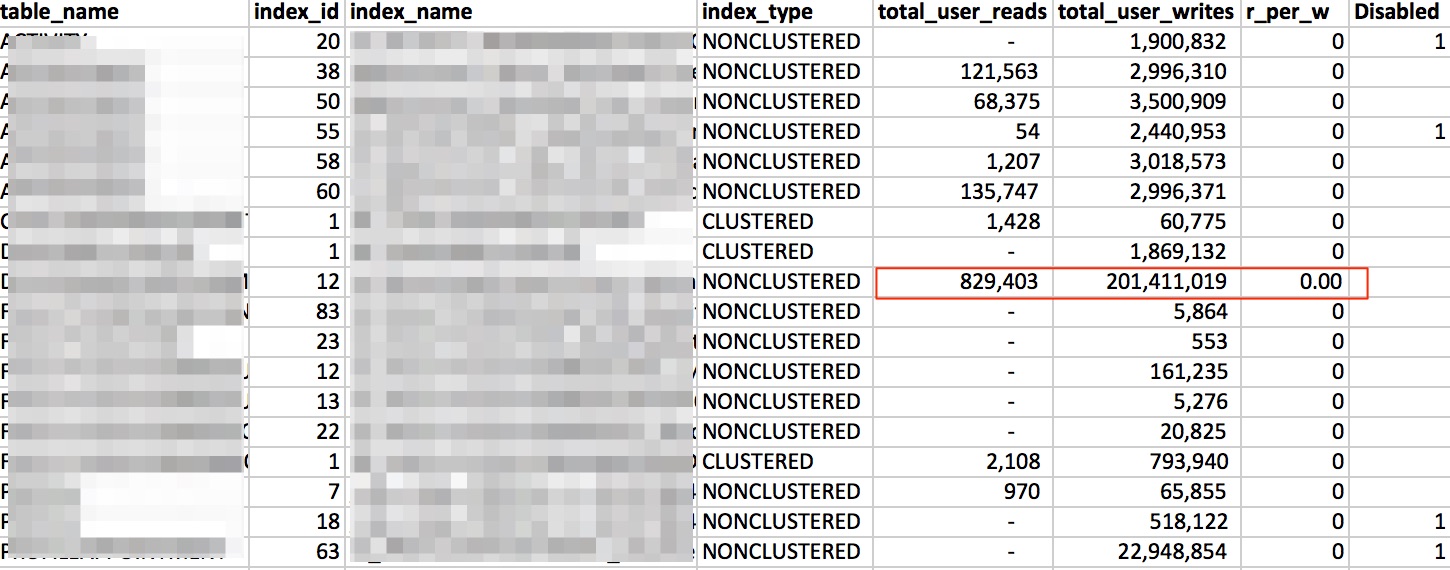

While the client's DBA was looking to add indexes for some key tables to assist in improving the queries addressing the ad hoc SQL code, I took a different approach: removing any indexes that were on the most-heavily trafficked tables, and whose updates were getting in the way of improving the duration and IO hit for each query coming into the system. Using the logic behind the queries in my previous articles on index usage, I was able to immediately identify the four candidates I marked as disabled = 1 below:

These were all indexes that were heavily biased toward not being used by users' queries, and therefore a substantial drag on performance. Three of the four fell into the earlier-described modified_datetime issue for their data warehouse team. The remaining index had the telltale signs of being created directly on the basis of a missing index hint (with the naming convention of DTA%, which is the default for index hints from the missing index Dynamic Management Objects that also serve as the backbone for the Database Tuning Advisor (a.k.a DTA).

I reached out to the data warehouse team only to find that they’re not performing data warehouse loading directly against the production database; rather, that team is restoring the previous day’s backup and then performing the incremental loading process. That explained the lack of reads against those indexes. Meanwhile, every underlying modification to the index was being recorded. The updates of all these extraneous indexes was adding IO and latency to what was already an overwhelmed instance. I had even considered disabling the index I boxed in red above with 829K reads because when balanced with the IO overhead of maintaining the index (201M writes) the effective amount of reads per write was still a rounding error.

One of the strongest assets for any data professional is the ability to remain calm when under pressure. There were thousands of clients struggling with extremely slow user experiences, and every minute that ticked by led to more inconvenienced customers. Disabling indexes requires an exclusive schema lock, and, with the amount of load and blocking that was occurring, there were some stressful minutes as the individual ALTER INDEX

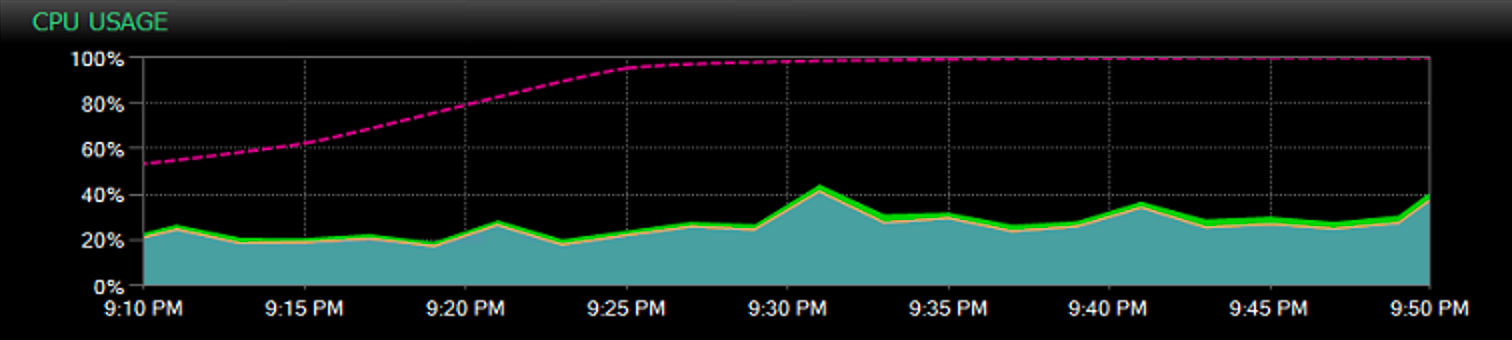

There was enough improvement that I felt it important enough to share the benefits. The end users were seeing queries taking in excess of 50ms or longer to complete with these indexes enabled. Afterward, that dropped to less than 3ms. Furthermore, where they saw extreme levels of disk/IO waits before the change, afterward the scale of waits dropped significantly and were replaced with common CX_PACKET waits, which signify parallelism and are typically benign. It actually pointed to better throughput, and then we started to see the blocks count drop, queue length dwindle, and a return to not only normal peak load, but better than normal peak load. (The CPU graph below shows the improvement from the previous day’s near 100% CPU crisis.)

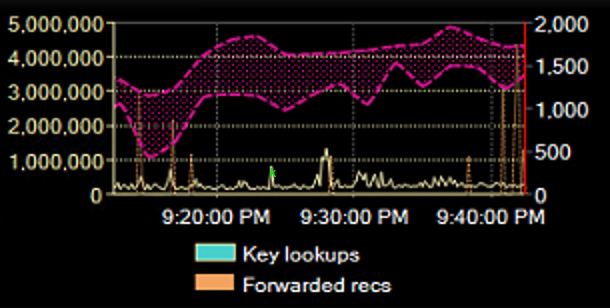

What really surprised me was that this action also knocked down those expensive key lookups at a rate that was just shy of miraculous. Key lookups were averaging a rate of 4 million/second; after the change they dwindled to acceptable limits as the indexes became disabled. The following graph shows what the previous day’s levels of key lookups per second were (shaded range):

Conclusion

While indexes are critical for a high-performing database, they are not a cure-all. I propose taking a minimalist approach to index creation: Always keep in the back of your mind the fact that every index comes with overhead. Taking a small amount of time each month to review index usage is also time well spent as a preventative measure. This should serve as a reminder of how important it is to have an understanding of how the databases are being used internally.

As for those indexes created for the data warehouse team and never used in production? By leaving them disabled, and not dropping them, it allows for the data warehouse team to enable them and rebuild them before their ETL process. They receive the full benefit of the indexes, but the indexes do not create overhead for the normal transactional processing happening in production.